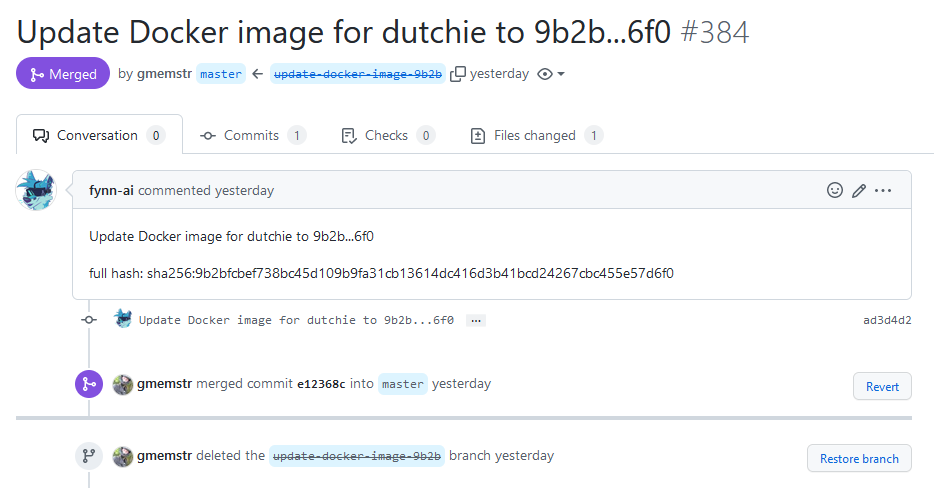

Compare commits

2 commits: dcbd398d02...9b43d5a783

| SHA1 |
|---|
| 9b43d5a783 |
| c8481c9d4f |

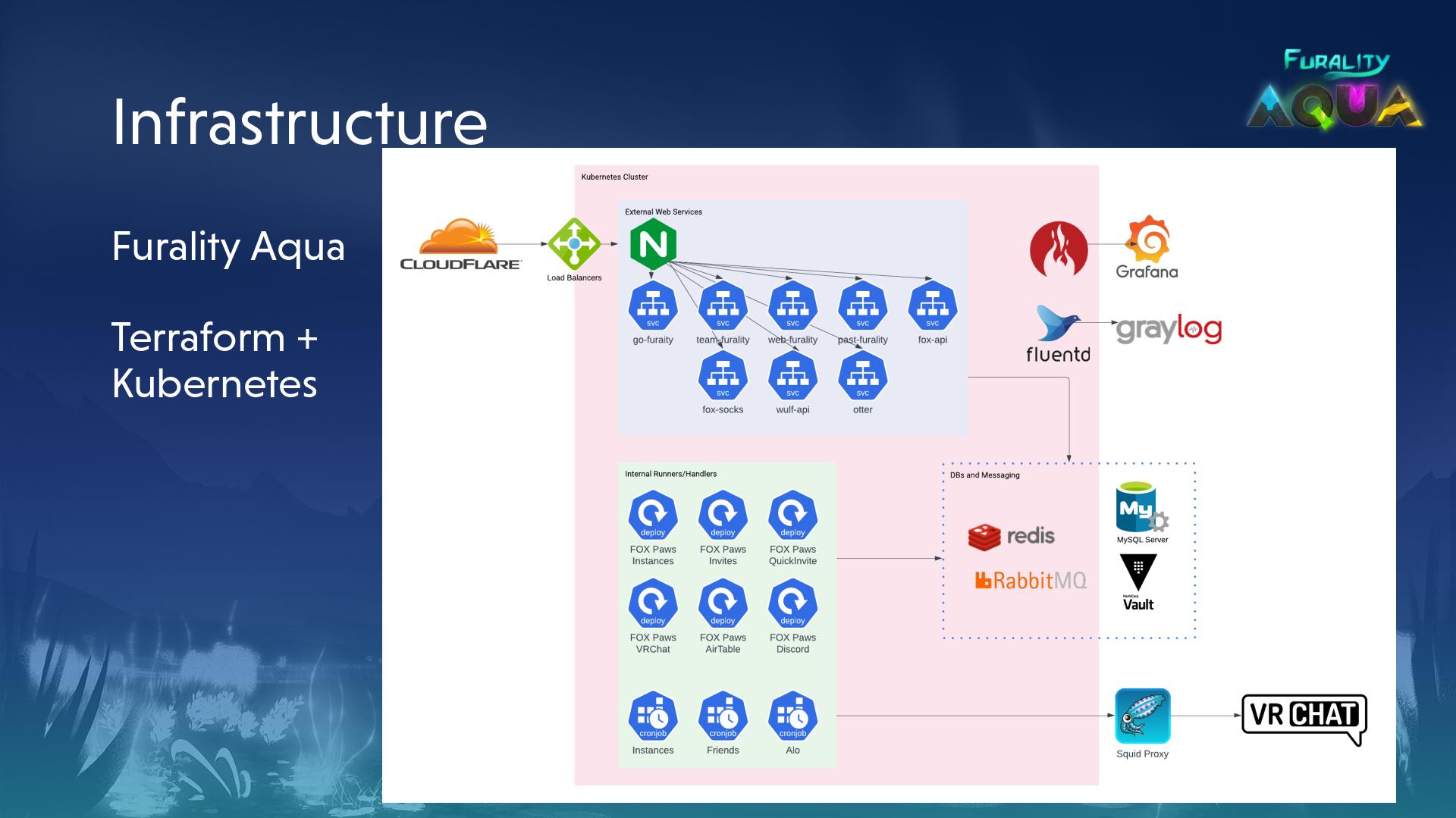

@ -31,7 +31,7 @@ what our infrastructure used to look like [here during the DevOps panel
at Legends](https://youtu.be/vmmyzFFn_Uo)), so I joined at a really
opportune time for influencing the direction we took.

While the infrastructure team is also responsible for maintaining the
streaming infrastructure required to run the convention club, live

@ -63,7 +63,7 @@ conclusion, let's dive into the points a little deeper, talk about how
Kubernetes addresses each issue, and maybe touch on *why you wouldn't*
want to use Kubernetes.

### Aggressive auto scaling, both up and down

@ -137,9 +137,9 @@ requiring some human intervention to not only merge the changes but also
apply the changes through Terraform Cloud, but it was better than
nothing, and let us keep everything in Terraform.

We also built out Dutchie, a little internal tool that gates our API
documentation behind OAuth and renders it in a nice format using

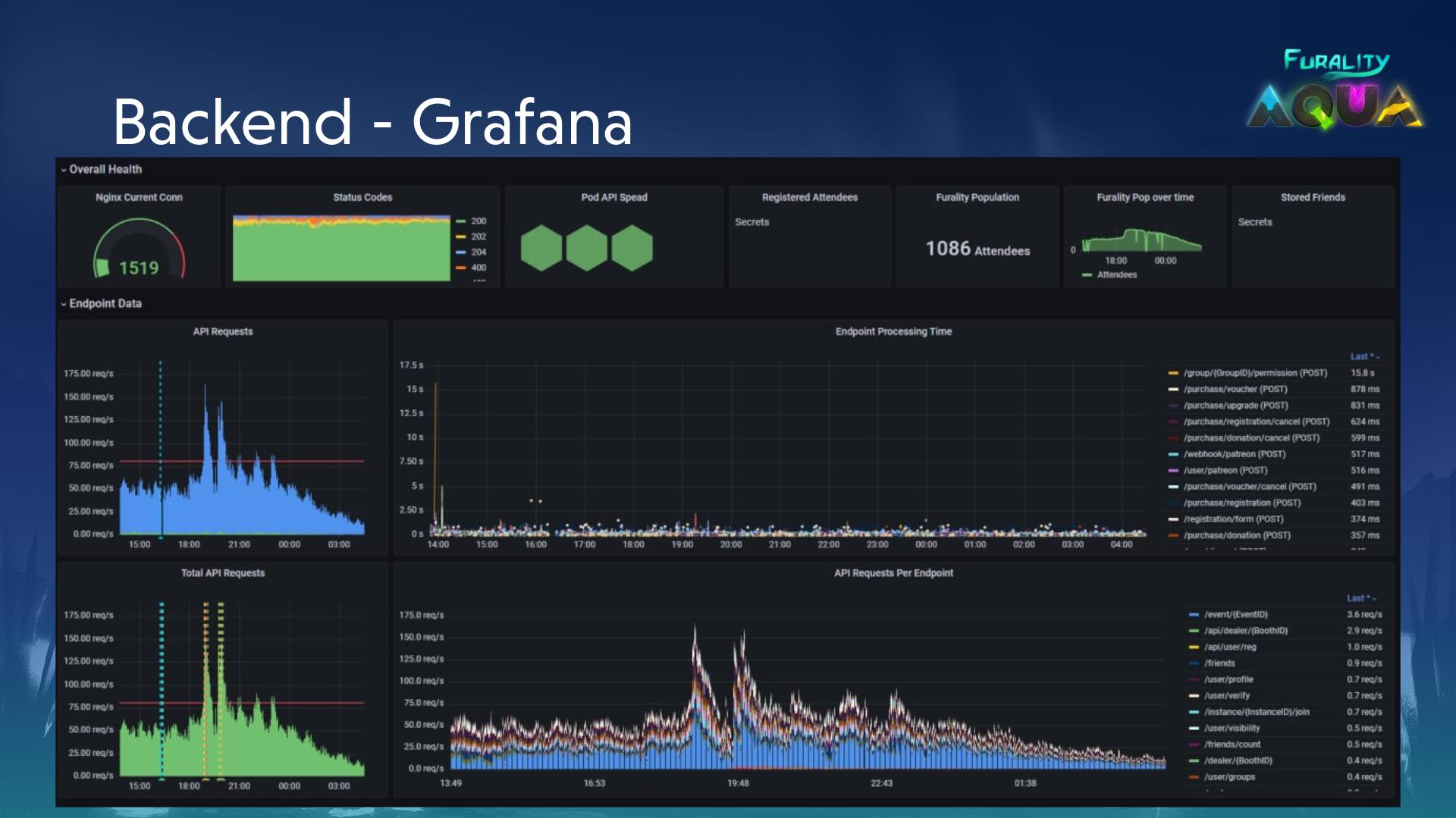

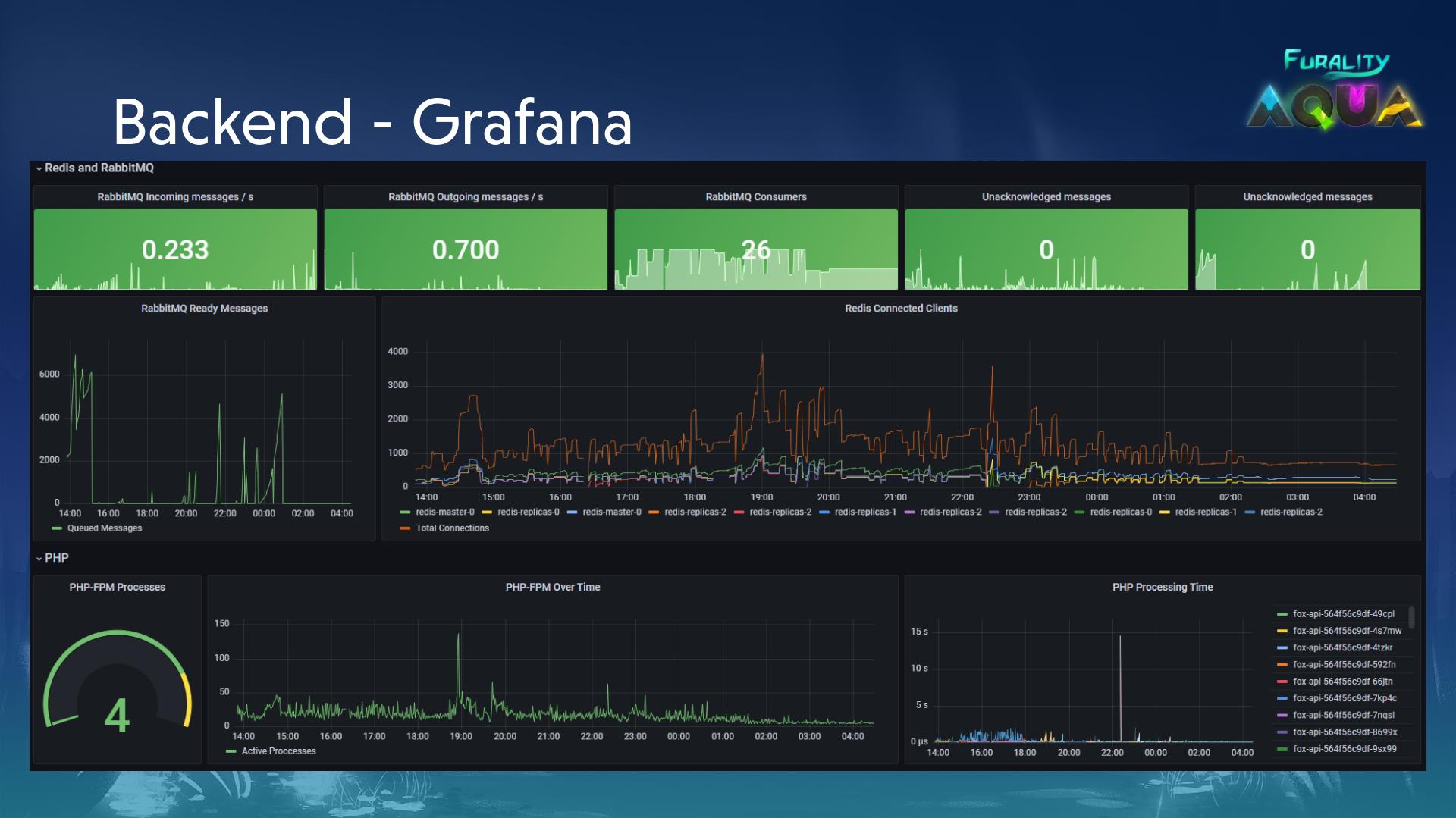

@ -156,9 +156,9 @@ pod for php-fpm metrics) that feed into the relevant services. From
there we can put up pretty dashboards for some teams and really verbose
ones for ourselves.

### What could have been done better?

@ -28,15 +28,15 @@ I've deliberately avoided setting up k3s on the NAS, either as a self contained
Since a lot of my servers (and my desktop!) run NixOS, you can check out my exact configurations [[https://git.sr.ht/~gmem/infra/tree/trunk/item/krops][on sourcehut]] - I deploy using [[https://github.com/krebs/krops][krops]], but the configurations themselves aren't krops-specific. My machine naming scheme mostly comes down to picking a random city when setting up the hardware: Seattle is my Pi 4B, Vancouver is the NAS, Kelowna is the Pi 3B+ (RIP), and London is my desktop.

#+attr_html: :alt NAS while building it :title NAS while building it
[[/images/nas-build.png]]
[[https://cdn.gabrielsimmer.com/images/nas-build.png]]

#+attr_html: :alt Another shot of the NAS :title Another shot of the NAS
[[/images/nas-complete.png]]
[[https://cdn.gabrielsimmer.com/images/nas-complete.png]]

#+attr_html: :alt A collection of Raspberry Pis in a case :title A collection of Raspberry Pis in a case
[[/images/pis-nas.png]]
[[https://cdn.gabrielsimmer.com/images/pis-nas.png]]

*** An aside: Nix and NixOS

@ -22,7 +22,7 @@ Going through the steps of removing any and all possible interruptions to a netw
I swiftly set up a meeting between my forehead and my desk (the two are good friends), and ran some speedtests just to be sure. Indeed, the NAS was getting the closer-to-gigabit speeds I would expect, and so were other devices on my network.

#+attr_html: :alt A graph showing around 950Mb/s from an internet speedtest
[[/images/speedtest-grafana.png]]
[[https://cdn.gabrielsimmer.com/images/speedtest-grafana.png]]

My theory is that having Quality of Service, App Analysis and VPN Fusion all enabled was too much for my poor router, and /it/ became the bottleneck in the chain. Unsurprisingly, disabling all of these features also massively reduced its memory and CPU usage. I suppose the moral of the story is: when in doubt, check how much work your router is doing when you use the network. Your traffic could be passing through a lot of layers on the way to its destination, each requiring a bit of compute, and together they can compound into a severe bottleneck.
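Why "closer-to-gigabit" rather than a clean 1000Mb/s? Even with an idle router, a gigabit link can't carry 1000Mb/s of payload, because every Ethernet frame also spends wire time on headers, a preamble and an inter-frame gap. A back-of-the-envelope sketch in Python, assuming IPv4, a standard 1500-byte MTU and untagged frames:

```python
def tcp_goodput_mbps(link_mbps: float = 1000, mtu: int = 1500,
                     tcp_options: int = 0) -> float:
    """Best-case TCP payload rate over Ethernet with standard framing.

    Each frame carries MTU - 40 - options bytes of payload (20-byte IPv4 and
    20-byte TCP headers, plus any TCP options) but takes MTU + 38 bytes of
    wire time: 14-byte Ethernet header, 4-byte FCS, 8-byte preamble/SFD and a
    12-byte inter-frame gap.
    """
    payload = mtu - 40 - tcp_options
    on_wire = mtu + 38
    return link_mbps * payload / on_wire


print(f"{tcp_goodput_mbps():.1f} Mb/s")                # no TCP options
print(f"{tcp_goodput_mbps(tcp_options=12):.1f} Mb/s")  # with TCP timestamps
```

This works out to roughly 949Mb/s without TCP options and roughly 941Mb/s with the 12-byte timestamp option Linux enables by default, so the ~950Mb/s in the graph is about as much as a gigabit connection can deliver.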

posts/more-infra-shenanigans.org (Normal file, 36 additions)

@ -0,0 +1,36 @@
#+title: More Infrastructure Shenanigans
#+date: 2023-12-24

#+ATTR_HTML: :alt My fursona doing important server work.
[[https://cdn.gabrielsimmer.com/images/close-crop-banned.png]]

I have been /busy/ with my infrastructure since my last post. Notably, the following has happened: I changed how my offsite backups are hosted, upgraded my NAS's storage and downgraded the GPU, rack-mounted stuff, built a Proxmox box, dropped krops for deploying my machines...

It's a lot, so it's probably worth breaking down. Let's start simple - I upgraded my NAS from four 4TB IronWolf Pro drives to three 16TB Seagate Exos drives. This roughly tripled my storage, even with the RAID-Z1 configuration, but it's not all upside - unfortunately these new drives are significantly louder, and made my poor little NAS produce much more noise and rattle. This is mostly down to the case I chose for rack mounting - rather cheap, but it gets the job done. We largely solved the issue by adding foam tape in strategic places, and some tape to the front panel bits that were rattling. It felt jank at first but works really well.

#+attr_html: :alt The new and improved, and taped, NAS.
[[https://cdn.gabrielsimmer.com/images/infra-shenanigans-2.jpg]]
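As a rough sanity check on that: RAID-Z1 gives up about one drive's worth of space to parity, so usable capacity is approximately (drives - 1) × drive size. This Python sketch ignores ZFS metadata, padding and TB vs TiB, so treat the results as ballpark figures rather than what `zfs list` reports.

```python
def raidz_usable_tb(drives: int, drive_tb: float, parity: int = 1) -> float:
    """Approximate usable capacity of a single RAID-Z vdev.

    RAID-Z1 (parity=1) spends roughly one drive's worth of space on parity.
    Metadata, allocation padding and TB vs TiB are ignored, so this is an
    estimate only.
    """
    if drives <= parity:
        raise ValueError("a RAID-Z vdev needs more drives than parity disks")
    return (drives - parity) * drive_tb


old = raidz_usable_tb(4, 4)    # four 4TB IronWolf Pro drives
new = raidz_usable_tb(3, 16)   # three 16TB Exos drives
print(f"old: {old:.0f}TB, new: {new:.0f}TB, ratio: {new / old:.2f}x")
```

Raw capacity triples exactly (16TB to 48TB), while usable space goes from about 12TB to about 32TB, roughly 2.7 times as much.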

As part of our homelab upgrades, we also started rack-mounting the machines. We picked up what seemed like a reasonable rack, only to quickly realise that racks designed for AV equipment are /not/ the same as racks designed for compute. This was rather startling - after all, a rack is a rack, right? Apparently not, and after several failed attempts to find actual rails that fit, we ended up with "rails" that are more shelf-like than anything. They work, and ended up working well with the foam we used for isolating the NAS, but sliding machines out is a little rougher. The rack itself is unfortunately in need of cable management, which I will eventually get to when I can bring myself to power everything down and ruin sweet, sweet uptime.

The other machine we put in the rack, besides my small Raspberry Pi Kubernetes cluster, is a new Proxmox virtualisation machine. It has reasonable specs for running multiple virtual machines: an AMD Ryzen 7 5700X, 64GB of memory, an RTX 2080 SUPER and a GTX 1070, and a 1TB SSD plus a 500GB SSD. As I explained in my [[https://gabrielsimmer.com/blog/messing-with-proxmox][last post about Proxmox]], the goal of this box is to serve as a stream machine for various online events, which requires Windows and GPUs for the software and encoding involved. With our newly upgraded internet connection it works really well, though only after we pulled out the extra 32GB of memory I had added to reach 96GB: with it installed, all the memory ran at 2133MHz rather than the 3600MHz the sticks were rated for. Right now it's running two Windows VMs for streaming (either vMix or OBS depending on the event) with one GPU assigned to each, a small machine for Immich's machine learning component, and a VM for various other things I need a machine for. I would like to give it more memory and buff the core count, but it has already handled a VR event with both Windows VMs working (one streaming video to the internet, the other running VRChat, ingesting some video feeds and streaming to Twitch while recording locally).

#+attr_html: :alt A fun server with two GPUs for running streams.
[[https://cdn.gabrielsimmer.com/images/infra-shenanigans-3.jpg]]

One downside of opting for Proxmox is that I can't rely on Nix to configure and manage the machine. But for everything else, I've made moves to make my life much easier. I ported all my NixOS configurations to Nix flakes, and opted for [[https://github.com/MatthewCroughan/nixinate][nixinate]] to handle deployment. It's a small wrapper around the existing remote functionality for Nix flakes, and has been a massive improvement over krops. For one, machines don't need their own checkout of the nixpkgs repository, which in turn means I don't need to install git manually before deploying from the repository. Nixinate also does some magic to keep working even when SSH disconnects, which is great when updating a system means the network needs to restart (Tailscale, NetworkManager, whatever it may be).
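For comparison, Nix's built-in tooling can already deploy a flake configuration remotely with plain `nixos-rebuild`: its `--flake` and `--target-host` options build the configuration locally, copy it over SSH and activate it on the target. This is not how nixinate is implemented, just a hypothetical Python helper that assembles such a command; the configuration names and `root@` SSH targets are assumptions, not the real flake.

```python
import shlex

# Hypothetical flake nixosConfigurations mapped to SSH targets. The names
# follow the city naming scheme but are assumptions, not the real flake.
HOSTS = {
    "seattle": "root@seattle",
    "vancouver": "root@vancouver",
}

ACTIONS = {"switch", "boot", "test", "dry-activate"}


def deploy_command(config: str, action: str = "switch", flake: str = ".") -> list[str]:
    """Build a nixos-rebuild invocation that builds `config` from the flake
    locally and activates it on the matching remote host over SSH."""
    if config not in HOSTS:
        raise KeyError(f"unknown host {config!r}")
    if action not in ACTIONS:
        raise ValueError(f"unsupported action {action!r}")
    return [
        "nixos-rebuild", action,
        "--flake", f"{flake}#{config}",
        "--target-host", HOSTS[config],
    ]


print(shlex.join(deploy_command("seattle")))
```

`shlex.join` quotes `.#seattle` because `#` isn't in its set of shell-safe characters, so the printed command can be pasted into a shell as-is.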

You may have noticed the Ryzen 5000-series CPU in the Proxmox machine. Rather than buying new hardware for it, I reused the hardware that had been living in my primary workstation and upgraded the workstation instead. Very worthwhile - I grabbed a Ryzen 7 7700X, a Radeon RX 7900 XT, a Samsung 980 Pro 2TB, and 32GB of DDR5 memory at 6200MHz. There is a /notable/ speed improvement, not just in games (where I can now crank everything) but also in day-to-day development. While I don't do much software development these days, focusing on ops, the software I do build is either a large project from someone else or written in Rust (often both). With a bit of tinkering, and the massive be quiet! CPU cooler, it can comfortably run at about 5.5GHz depending on the task.

#+attr_html: :alt A look inside my new workstation rig.
[[https://cdn.gabrielsimmer.com/images/infra-shenanigans-1.jpg]]

Pivoting a bit, let's talk about connectivity. I've relied a lot on Tailscale to keep my devices connected to each other and to reach the services I self-host without exposing them to the internet. But as I adopt more services at home, I find some cases where I do want to expose something, especially for disaster recovery. For a while, I relied on a DIY solution I detailed [[https://gabrielsimmer.com/blog/diy-tailscale-funnel][here]], but it had a few downsides. For one, the tunnel connected to everything over Tailscale, which proved problematic when the proxy's own keys expired while it was proxying my Authentik instance, the very instance I use to authenticate with Tailscale. I finally caved and moved my tunnels from my DIY solution to Cloudflare Tunnels. Admittedly, I had already been using them for [[https://gabrielsimmer.com/blog/couchdb-as-a-backend][my partner's website]], using a tunnel to expose the backend CouchDB instance and CloudFront for the caching portion. Why not use Cloudflare directly? I'm not sure - this was likely set up around the time I was trying to get out of my comfort zone and play with the other offerings out there, so I kept one foot out the door. Regardless, the free offering is sometimes too good to ignore. There were some nice advantages to moving, though: I can remove the reliance on Tailscale functioning for some core services, and I can add OIDC authentication in front of some of the services so I can still reach them from non-Tailscale devices without worrying about them being exposed to the internet.
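Cloudflare Tunnel's `cloudflared` routes requests with an ordered list of ingress rules: the first rule whose hostname matches wins, and the final rule has to be a catch-all such as `http_status:404`. The Python sketch below models only that first-match behaviour; the hostnames and backend services are placeholders, and it ignores path matching and the exact wildcard semantics of the real config.

```python
import fnmatch
from dataclasses import dataclass
from typing import Optional


@dataclass
class IngressRule:
    service: str
    hostname: Optional[str] = None  # None matches any hostname (catch-all)


# Hypothetical rules: hostnames and backend addresses are placeholders.
RULES = [
    IngressRule(service="http://localhost:9000", hostname="auth.example.com"),
    IngressRule(service="http://localhost:8080", hostname="*.example.com"),
    IngressRule(service="http_status:404"),  # catch-all, must be last
]


def route(host: str, rules: list[IngressRule] = RULES) -> str:
    """Return the service of the first rule whose hostname matches `host`,
    mimicking cloudflared's top-to-bottom evaluation."""
    if rules[-1].hostname is not None:
        raise ValueError("the last ingress rule must be a catch-all")
    host = host.lower()
    for rule in rules:
        # fnmatch is a simplification of cloudflared's wildcard handling.
        if rule.hostname is None or fnmatch.fnmatchcase(host, rule.hostname):
            return rule.service
    raise AssertionError("unreachable: the catch-all always matches")


print(route("auth.example.com"))     # http://localhost:9000
print(route("grafana.example.com"))  # http://localhost:8080
print(route("example.org"))          # http_status:404
```

Rule order matters: putting the wildcard above `auth.example.com` would silently send the auth traffic to the wrong backend.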

As part of this move (back?) to Cloudflare Tunnels, I also reassessed my usage of the platform as a whole. Since I started using Cloudflare it has grown a lot, and now offers way more than just DNS and proxying. While I was considering all of this, I was hired as a Site Reliability Engineer at Cloudflare, which gave me a very good excuse to dive in and start fully leveraging the platform. So far I've transplanted [[https://gmem.ca][gmem.ca]] and its associated endpoints & files, along with [[https://fursona.gmem.ca][fursona.gmem.ca]]. [[https://artbybecki.com/][artbybecki.com]] was moved to Cloudflare Pages a little while ago, and I've removed some of the hops and services it relied on, using Cloudflare's own image resizing and optimisation offerings (not Cloudflare Images) to serve the galleries, which has made it much, /much/ faster. This website is still served by Fly.io directly. While I haven't finished the gossip-protocol-based content distribution, and at this rate it's unlikely that I will, I still like having it as a place to play with Rust and interesting ideas, so it's unlikely it will ever proxy through Cloudflare. With that said, it /is/ likely that the images on this blog will end up in an R2 bucket or similar so I don't have to think about them.
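Cloudflare's image transformations (distinct from the Cloudflare Images product) work by prefixing an image path with `/cdn-cgi/image/` and a comma-separated list of options, for example `width=400,quality=80,format=auto`, on a zone where the feature is enabled. A minimal Python helper for building those URLs; the zone and image path are placeholders, not the real gallery layout.

```python
from urllib.parse import quote


def transform_url(zone: str, source_path: str, **options) -> str:
    """Build a Cloudflare image-transformation URL of the form
    https://<zone>/cdn-cgi/image/<options>/<source image path>."""
    if not options:
        raise ValueError("at least one transformation option is required")
    opts = ",".join(f"{key}={value}" for key, value in options.items())
    source = quote(source_path.lstrip("/"), safe="/")
    return f"https://{zone}/cdn-cgi/image/{opts}/{source}"


# Placeholder zone and image path: a 400px-wide gallery thumbnail, letting
# Cloudflare pick WebP or AVIF when the browser supports it.
print(transform_url("example.com", "/gallery/piece-01.jpg",
                    width=400, quality=80, format="auto"))
```

Because the original image stays at its normal path, the gallery only needs to rewrite `src` attributes rather than store pre-resized copies.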

/As a quick disclaimer:/ Now that I work at Cloudflare, I'll likely mention its products more often, here and elsewhere. When I join a new company I tend to explore the bits and pieces of its tech stack in my own time, and Cloudflare does a lot of dogfooding, so talking about Cloudflare more often is inevitable.

So where do I go from here?

The number one priority now is building a custom router. I've played with OPNsense enough at this point to feel comfortable with it, so I hope to eventually replace our Asus RT-AX82U with some custom hardware and one or two wireless access points. The main advantages will be the ability to add 2.5Gbit capability to my network (which will also require a new switch - oh no! How dreadful!), and VLANs to hopefully separate the virtual machines (and other devices). The NAS will also see some minor upgrades over time, but nothing drastic is likely. I'll also be evaluating my use of Tailscale, although replacing it is out of the question since I depend on it so much. Tailscale the company being VC-backed is always in the back of my mind, but I haven't found anything that does exactly what it does for the same price and convenience. But who knows! Tech moves fast and I'm always eager to experiment.
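VLANs let one physical network carry several isolated ones: on a trunk link, each Ethernet frame carries a 4-byte IEEE 802.1Q tag whose 12-bit VLAN ID tells the switch and router which network it belongs to. As a small illustration, not tied to OPNsense or any particular switch, this Python sketch packs and unpacks that tag; VLAN 20 in the example is an arbitrary choice.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Encode the 4-byte 802.1Q tag inserted after the source MAC address.

    Layout: 16-bit TPID, then the 16-bit TCI made of a 3-bit priority (PCP),
    a 1-bit drop-eligible indicator (DEI) and a 12-bit VLAN ID (VID).
    """
    if not 1 <= vid <= 4094:  # 0 and 4095 are reserved
        raise ValueError("VLAN ID must be between 1 and 4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP must be 0-7 and DEI must be 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> tuple[int, int, int]:
    """Decode a 4-byte 802.1Q tag back into (vid, pcp, dei)."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError(f"not an 802.1Q tag: TPID {tpid:#06x}")
    return tci & 0x0FFF, tci >> 13, (tci >> 12) & 1


print(vlan_tag(20).hex())  # tag for a hypothetical "virtual machines" VLAN
```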

@ -9,27 +9,27 @@ But before diving in that keyboard, let's get into my history with keyboards. My

|

|||

|

||||

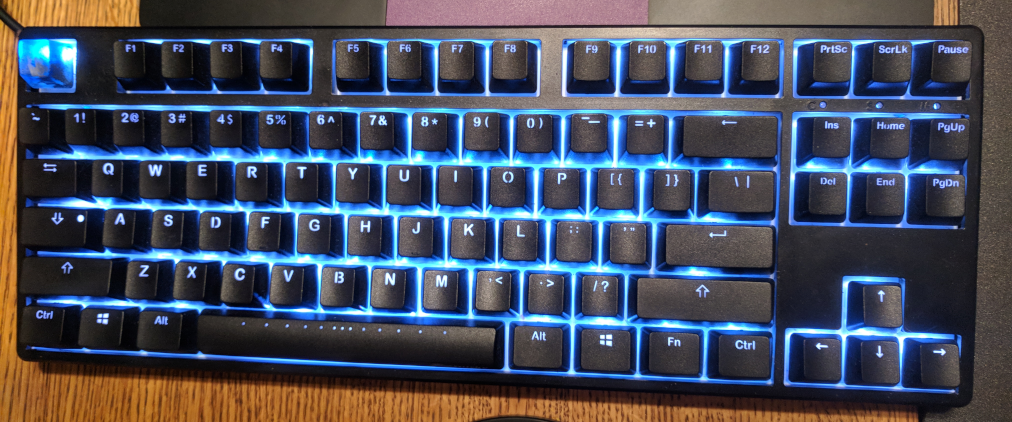

_The Corsair K70 keyboard. Very gamery._

|

||||

|

||||

|

||||

|

||||

|

||||

_The Razer BlackWidow keyboard, from a terrible panorama that is my only evidence of owning one_

|

||||

|

||||

|

||||

|

||||

|

||||

This Ducky One lasted me about a year or so, during that time I bought some (very nice) SA style keycaps (which I ended up removing relatively quickly as I didn't like the profile). At some point, however, the draw of smaller keyboards was too much, and I found myself ordering an Anne Pro off AliExpress (or one of those sites) with Gateron Brown switches (tactile, ish). Being a 60% form factor, it was a major adjustment, but I got there and it was a great relief freeing up some more room on the tiny desk I had at the time. Commuting to an office every day was also somewhat simplified, although I ended up purchasing two of the Anne Pro's successor, the Anne Pro 2. It was roughly the same keyboard, but with slightly better materials and some refinements to the software control and bluetooth interface. I ordered one with Cherry MX Blue switches and another with Kalih Box Blacks, to experience a heavier linear switch. This was also my first real exposure to alternative switch makers, and I was a massive fan of the Box Blacks. I found the brown switches were a bit too linear, and the blues were blues -- clicky, but not in a pleasant way, especially during extended use.

|

||||

|

||||

_Ducky One TKL_

|

||||

|

||||

|

||||

|

||||

|

||||

_Ducky One TKL with SA style keycaps_

|

||||

|

||||

|

||||

|

||||

|

||||

These Anne Pros lasted me several years, switching between them as I wanted and even bringing one traveling (since it was just that compact). And I still love them. But I knew it was time to upgrade. I found myself missing the navigation cluster, and wishing for a slightly more premium experience. So I started doing some research, and quickly feel down a rabbit hole.

|

||||

|

||||

_The Anne Pro collection - left to right, black with Cherry MX Blues, white with Kalih Box Blacks, Anne Pro with Gateron Browns_

|

||||

|

||||

|

||||

|

||||

|

||||

Keyboards are dangerous. The ignorant are lucky -- there is so much information out there that it is possible to become overwhelmed very quickly. Everything from the material keycaps are made of, to the acoustic properties of case materials, to the specific plastics _each part_ of a switch is made from. I had little clue what I was doing, but eventually settled on a few things; I wanted a navigation cluster, I wanted something slightly more compact than a full size keyboard, and I wanted to get premium materials.

|

||||

|

||||

|

|

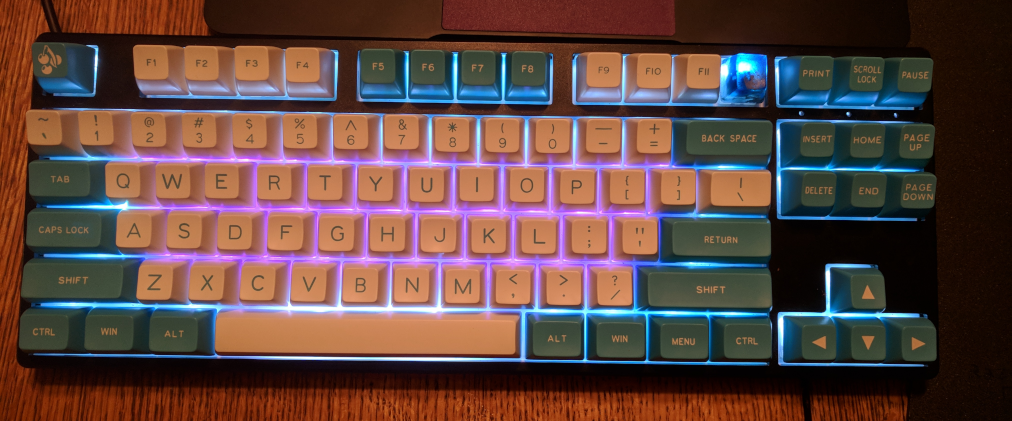

@ -49,8 +49,8 @@ And so far? Writing this post on that keyboard? I'm pretty happy with it (for th

_The GMMK Pro in question_

This is by no means my "end game" keyboard. I do plan on investing further in this hobby, but slowly. I already have a small list of switches to try and kits to experiment with, and some inkling of how I'd want to custom-design a keyboard. With time.

@ -45,7 +45,7 @@ a long way from the initial versions, now supporting fancy things like GUI apps
currently typing this in emacs running in WSL, which is still a bit weird to me).

#+ATTR_HTML: :title Emacs on Windows in WSL :alt Emacs GUI running from WSL
[[file:/images/emacs-on-windows.png]]
[[https://cdn.gabrielsimmer.com/images/emacs-on-windows.png]]

JetBrains editors also have okay support for WSL, so it's feasible to do what little
personal development I do these days in a mostly complete Linux environment.

@ -2,7 +2,7 @@
#+date: 2023-03-06

#+attr_html: :alt An internet connected house (Midjourney)
[[/images/slightly-intelligent-home.png]]
[[https://cdn.gabrielsimmer.com/images/slightly-intelligent-home.png]]

/Generated by the Midjourney bot from the prompt "An internet connected house"/

@ -11,7 +11,7 @@ I'm not overly-eager to automate my whole home. Leaving aside security concerns,
Lights and lightbulbs are maybe the biggest no-brainer to automate around your home, at least in my eyes. Automate them to wake you up, turn off when you leave the house, turn on when it gets dark in the evening or when you're away, and so on. To that end, for the last few years I've had a set of Hue bulbs (an older version with their hub) installed in my bedroom, upstairs office, and bird room. Over the years the app has gotten a bit clunky and slow, so I ended up [[https://github.com/gmemstr/hue-webapp][making my own webapp]] for toggling lights, but I don't have any plans to upgrade the kit anytime soon. We'll talk about how I control the lights, and the rest of the home, further down.

#+attr_html: :alt Simple Hue webapp :title Hue webapp
[[/images/hue-webapp.png]]
[[https://cdn.gabrielsimmer.com/images/hue-webapp.png]]
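The webapp's code isn't reproduced here, but with the older (v1) Hue bridge API, toggling a light boils down to a `PUT` to `/api/<username>/lights/<id>/state` with a JSON body such as `{"on": true}`. A minimal Python sketch that only builds that request; the bridge address, API username and light ID are placeholders, and this isn't necessarily how the webapp does it.

```python
import json
import urllib.request


def light_state_request(bridge_ip: str, username: str, light_id: int,
                        on: bool) -> urllib.request.Request:
    """Build, without sending, a Hue v1 API request that switches one light."""
    url = f"http://{bridge_ip}/api/{username}/lights/{light_id}/state"
    body = json.dumps({"on": on}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="PUT",
        headers={"Content-Type": "application/json"},
    )


# Placeholder bridge address, API username and light ID.
req = light_state_request("192.168.1.2", "example-username", 3, on=True)
print(req.get_method(), req.full_url, req.data)

# Sending it requires a real bridge on the local network:
# with urllib.request.urlopen(req, timeout=5) as resp:
#     print(json.load(resp))
```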

We have a camera pointed at our bird's cage to keep an eye on her. It's a fairly straightforward Neos SmartCam/Wyzecam V2/Xiaomi Xiaofang 1S/whatever other branding it exists under, and has been flashed with the [[https://github.com/EliasKotlyar/Xiaomi-Dafang-Hacks/][Dafang Hacks custom firmware]] for a plain RTSP stream (alongside the other, more standard protocols it exposes). This means I can open it in any player (usually VLC) without any problems. It's not the most powerful camera, streaming only 720p at the moment (24FPS, 300kbps), but for the simple use case of checking in on our bird when we're out of the house it serves its purpose well. Not exactly "smart", but it is still part of the system.
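At 300kbps the stream is light enough to leave running. A quick back-of-the-envelope calculation in Python, assuming the bitrate stays constant (real encoders vary it with how much is moving in the scene):

```python
def stream_bytes(bitrate_kbps: float, seconds: float) -> float:
    """Bytes sent by a constant-bitrate stream (1 kbps = 1000 bit/s)."""
    return bitrate_kbps * 1000 / 8 * seconds


per_hour = stream_bytes(300, 3600)
per_day = stream_bytes(300, 24 * 3600)
print(f"{per_hour / 1e6:.0f} MB per hour, {per_day / 1e9:.2f} GB per day")
```

That is roughly 135MB per hour, or about 3.24GB if someone watched all day.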

@ -24,6 +24,6 @@ Controlling all of this, especially in a centalised manner, is a little tricky.
As a sort of hidden benefit of Homebridge, I've been able to bring the thermometer metrics into my Grafana Cloud instance (more on this in the future), since the plugin's values are printed to the log and shipped off to Loki. From there I use some regex on the logs to extract the values. It's as bad as one might expect - that is to say, not horrible, but sometimes inaccurate.

#+ATTR_HTML: :alt Grafana dashboard for climate metrics :title Climate metrics in Grafana
[[/images/grafana-climate.png]]
[[https://cdn.gabrielsimmer.com/images/grafana-climate.png]]
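The extraction itself is plain pattern matching on log lines. Since the plugin's actual log format isn't shown here, this Python sketch uses an invented line shape (`[Thermometer] Bedroom: temperature 19.5°C, humidity 48%`) to show the idea; in Loki the same job would be done in LogQL with the `regexp` parser. Lines that don't match return `None` instead of a half-parsed value.

```python
import re
from typing import Optional

# Invented log format for illustration; the real plugin's lines will differ,
# so the pattern would need adjusting to match them.
READING_RE = re.compile(
    r"\[[^\]]+\]\s+(?P<room>[\w ]+?):\s+"
    r"temperature\s+(?P<temp>-?\d+(?:\.\d+)?)\s*°C"
    r"(?:,\s+humidity\s+(?P<humidity>\d+(?:\.\d+)?)%)?"
)


def parse_reading(line: str) -> Optional[dict]:
    """Pull room, temperature and (optional) humidity out of one log line.
    Lines that don't look like sensor readings return None."""
    match = READING_RE.search(line)
    if match is None:
        return None
    humidity = match.group("humidity")
    return {
        "room": match.group("room"),
        "temperature_c": float(match.group("temp")),
        "humidity_pct": float(humidity) if humidity is not None else None,
    }


print(parse_reading("[3/6/2023, 18:04:11] [Thermometer] Bedroom: temperature 19.5°C, humidity 48%"))
```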

From here, the next step is to get some connected thermostatic valves for the radiators around the house. The radiator valves have ended up in slightly awkward or hard-to-reach places, so being able to connect them up and adjust them as needed (especially scheduling them for evening VR sessions) would be a huge plus. Beyond that, I'm unsure what else I would want to introduce in the home - most of the common bases are covered, especially when it comes to keeping an eye on things when we're out of the house. But who knows - keep up with me [[https://floofy.tech/@arch][on Mastodon]] and we'll see what happens next.

@ -9,7 +9,7 @@ The want for quickly sharing files across devices and having an easy interface f

Thus was born the concept of _sliproad_, originally just "pi nas" (changed for obvious reasons). The initial commit was February 24th, 2019, [and really isn't much to look at](https://github.com/gmemstr/sliproad/commit/7b091438d43d77300c4be8afb64e2735dd423d71) - just reading a configuration defining a "cold" and "hot" storage location. The idea behind this stemmed from my use of two drives attached to my server at the time (a small ThinkCentre PC), one being an external hard drive and the other a much faster USB 3 SSD. For simplicity, I leveraged server-side rendered templates for file listings, and the API wasn't really of importance at this point.
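That first "hot" and "cold" configuration can be pictured as a mapping from tier names to directories. A hypothetical Python reconstruction, not sliproad's actual code: the keys and mount paths are placeholders, and the check that rejects `..` and absolute paths is an addition any file server exposing a directory should have.

```python
from pathlib import PurePosixPath

# Hypothetical tiers and mount points standing in for the original config file.
CONFIG = {
    "hot": "/mnt/ssd/files",   # the faster USB 3 SSD
    "cold": "/mnt/hdd/files",  # the external hard drive
}


def resolve(tier: str, relative: str) -> PurePosixPath:
    """Map a (tier, relative path) request onto that tier's root directory,
    refusing anything that could escape the root."""
    if tier not in CONFIG:
        raise KeyError(f"unknown storage tier {tier!r}")
    rel = PurePosixPath(relative)
    if rel.is_absolute() or ".." in rel.parts:
        raise ValueError("path escapes the storage root")
    return PurePosixPath(CONFIG[tier]).joinpath(*rel.parts)


print(resolve("cold", "photos/2019"))  # /mnt/hdd/files/photos/2019
```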

For a long while, this sufficed. It was more or less a file explorer for a remote filesystem with two degrees of performance. But I wanted to expand on the frontend, specifically looking for more dynamic features. In March of 2019 I began decoupling functionality into an API rather than server-side templates, and that evolved into the implementation of "providers". I'm not entirely sure what sparked this idea, besides beginning to use Backblaze around the time of the rewrite. Previously, I ran a simple bash script to rsync my desktop's important files to the ThinkCentre's cold storage, but understood I needed offsite backups. Offloading this to Backblaze's B2 service was an option (and well worth the price), but it meant sacrificing ease of use when looking through backed-up files. Bringing the various file providers under one roof allowed me to keep using the same interface and gave me the option of expanding the methods of interfacing with the filesystems provided. Around this time I was looking to rebrand, and taking a cue from highways, chose the name "sliproad" to signify the merging of filesystems into one "road" (or API).
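A "provider" here boils down to a common interface that every backing store implements, plus a router that picks one based on the request path. A hypothetical Python sketch of that shape, not sliproad's actual code: only directory listing is modelled, and the provider names and in-memory trees are made up for illustration.

```python
from abc import ABC, abstractmethod


class Provider(ABC):
    """Common interface every backing filesystem implements."""

    @abstractmethod
    def list_dir(self, path: str) -> list[str]:
        """Return the entries under `path`, relative to this provider."""


class InMemoryProvider(Provider):
    """Stand-in for a real backend such as local disk or B2."""

    def __init__(self, tree: dict[str, list[str]]):
        self.tree = tree

    def list_dir(self, path: str) -> list[str]:
        return sorted(self.tree.get(path.strip("/"), []))


class Router:
    """Send /<provider>/<path> requests to the named provider."""

    def __init__(self, providers: dict[str, Provider]):
        self.providers = providers

    def list_dir(self, request_path: str) -> list[str]:
        name, _, rest = request_path.strip("/").partition("/")
        if name not in self.providers:
            raise KeyError(f"no provider named {name!r}")
        return self.providers[name].list_dir(rest)


# Hypothetical providers: one "local disk" tree and one "B2" tree.
router = Router({
    "disk": InMemoryProvider({"": ["music", "documents"], "music": ["a.flac"]}),
    "b2": InMemoryProvider({"": ["desktop-backup"]}),
})
print(router.list_dir("/disk/"))  # ['documents', 'music']
print(router.list_dir("/b2/"))    # ['desktop-backup']
```

Adding a new backend then means writing one more `Provider` subclass; the frontend keeps talking to the same router.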

@ -8,7 +8,7 @@ Getting to know someone's fursona can be tricky, though. Some may have at most a
When it comes to static websites there is a plethora of options, which can lead to a bit of analysis paralysis. Some of the options include GitHub Pages, Vercel, Netlify, Cloudflare Pages, AWS S3 or Amplify, 'dumb' shared hosts, and more. In this instance, I decided to opt for an AWS S3 bucket with CloudFront as a CDN/cache to keep costs down (CloudFront's free tier includes 1TB of egress per month). The main advantage is that, due to Amazon's dominance, S3 has more or less become the standard API for interacting with object stores, so there are plenty of tools and libraries available should I want to expand on it. The disadvantage is that I miss out on the fancy auto deployments and integrations that other providers offer, but given this is /just/ hosting some static files (including a static [[https://gmem.ca/.well-known/webfinger][webfinger]]) I'm not particularly worried about it.

#+attr_html: :alt A simple HTML page using dog onomatopoeia for words
[[/images/silly-site.png]]
[[https://cdn.gabrielsimmer.com/images/silly-site.png]]

/As a sidenote, thanks to the webfinger file, I now have a custom OIDC provider set up for my [[https://tailscale.com][tailnet]]! I'll probably talk about this in another post./
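A static webfinger file is enough for this because Tailscale's custom OIDC setup looks up `https://<domain>/.well-known/webfinger` for the login address and expects a link whose `rel` is `http://openid.net/specs/connect/1.0/issuer`, pointing at the identity provider. A minimal Python sketch that generates such a document; the account and issuer URL are placeholders.

```python
import json


def webfinger_jrd(account: str, issuer: str) -> str:
    """Build a WebFinger (RFC 7033) JSON Resource Descriptor that points an
    account at its OpenID Connect issuer."""
    jrd = {
        "subject": f"acct:{account}",
        "links": [
            {
                "rel": "http://openid.net/specs/connect/1.0/issuer",
                "href": issuer,
            }
        ],
    }
    return json.dumps(jrd, indent=2)


# Placeholder account and issuer. Upload the output as .well-known/webfinger
# and serve it with Content-Type: application/jrd+json.
print(webfinger_jrd("me@example.com", "https://auth.example.com"))
```

Since a static file can't inspect the `resource` query parameter, it returns the same descriptor for every lookup, which is fine for a single-person domain.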
