Port posts over

Actual layout, parsing of publish dates and titles is TBD.

This commit is contained in:

parent

a5ff805136

commit

1ad416634d

|

|

@ -14,6 +14,4 @@

|

||||||

**/.direnv

|

**/.direnv

|

||||||

**/gs.*

|

**/gs.*

|

||||||

**/result

|

**/result

|

||||||

**/posts

|

|

||||||

!**/posts/.keep

|

|

||||||

fly.toml

|

fly.toml

|

||||||

|

|

|

||||||

2

.gitignore

vendored

2

.gitignore

vendored

|

|

@ -13,5 +13,3 @@ target/

|

||||||

.direnv/

|

.direnv/

|

||||||

gs.*

|

gs.*

|

||||||

result

|

result

|

||||||

posts/

|

|

||||||

!posts/.keep

|

|

||||||

|

|

|

||||||

52

posts/30-04-2016.md

Normal file

52

posts/30-04-2016.md

Normal file

|

|

@ -0,0 +1,52 @@

|

||||||

|

---

|

||||||

|

title: 30/04/2016

|

||||||

|

date: 2016-04-30

|

||||||

|

---

|

||||||

|

|

||||||

|

#### DD/MM/YYYY -- a monthly check-in

|

||||||

|

|

||||||

|

A lot has happened this month. From NodeMC v5 and v6 to

|

||||||

|

a new website redesign to a plethora of new project ideas, May is going

|

||||||

|

to be a much busier month, so I think I should check-in.

|

||||||

|

|

||||||

|

NodeMC, one of my most beloved projects thus far, has been going far

|

||||||

|

better than I could have ever imagined, with more than 30 unique cloners

|

||||||

|

in the past 30 days and about 300 unique visitors, those have far

|

||||||

|

outpaced my other projects. And to top it, off, 13 lovely stars. Version

|

||||||

|

1.5.0 (aka v5) [recently launched with plugin

|

||||||

|

support](https://nodemc.space/blog/3), and has been a monumental release for me in terms of

|

||||||

|

what it has taught me and what the contributors and I have achieved. A

|

||||||

|

big hats-off to [md678685](https://twitter.com/md678685) for helping with the plugin system and other fixes

|

||||||

|

within in the release. NodeMC v6, however, is going to be even bigger.

|

||||||

|

[Jared Allard](https://jaredallard.me/) of nexe fame (among other projects) has taken

|

||||||

|

interest in the project and has rewritten the bulk of it using ES6

|

||||||

|

standards, which both md678685 and I have been learning, and has

|

||||||

|

recently decided to rewrite the stock dashboard using the React

|

||||||

|

framework. I could not be happier working with him on v6.

|

||||||

|

|

||||||

|

On a smaller note, [my personal website](http://gabrielsimmer.com)

|

||||||

|

has had a bit of a facelift. I decided to do so after months of using a

|

||||||

|

free HTML5 template that really did not offer a whole lot of room to

|

||||||

|

customize. My new site allows me to add pretty much an unlimited number

|

||||||

|

of projects and other information as I see fit. It's built using my

|

||||||

|

favorite CSS framework [Skeleton](http://getskeleton.com/),

|

||||||

|

which I will forever see as superior to Bootstrap, despite not being

|

||||||

|

updated in more than a year (I may have some free time to fork it), and

|

||||||

|

using a nice font called [Elusive Icons](http://elusiveicons.com/) for

|

||||||

|

the small icons. I'm throwing the source up on GitHub as I type this

|

||||||

|

post ([it's up!](https://github.com/gmemstr/GabrielSimmer.com)).

|

||||||

|

|

||||||

|

I have a lot of projects in my head. Too many to count or even write

|

||||||

|

down. It's going to be a crazy few months as some of my more long-term

|

||||||

|

projects (some of them mine, others I'm just working on) are realized

|

||||||

|

and launched. I really don't want to say much, but I know that several

|

||||||

|

online communities, namely the Minecraft and GitHub communities, will be

|

||||||

|

very excited when I am able to talk more freely on what I have coming

|

||||||

|

up.

|

||||||

|

|

||||||

|

_*Okay, maybe I'll tease one thing -- I recently bought the YourCI.space domain, which I am not just partking!*_

|

||||||

|

|

||||||

|

Just a quick heads up as well, everyone should go check out [Software Engineering Daily](http://softwareengineeringdaily.com/). I am going to be on the show later next month, but I recommend you subscribe to that wonderful podcast regardless. I will be sure to link the specific episode when it comes out over on [my Twitter](https://twitter.com/gmemstr)

|

||||||

|

|

||||||

|

> "If you have good thoughts they will shine out of your face like

|

||||||

|

> sunbeams and you will always look lovely." -- Roald Dahl

|

||||||

0

posts/_index.md

Normal file

0

posts/_index.md

Normal file

36

posts/a-bit-about-batch.md

Normal file

36

posts/a-bit-about-batch.md

Normal file

|

|

@ -0,0 +1,36 @@

|

||||||

|

---

|

||||||

|

title: A bit about batch

|

||||||

|

date: 2016-01-21

|

||||||

|

---

|

||||||

|

|

||||||

|

#### Or: why there is no NodeMC installer

|

||||||

|

|

||||||

|

Can we just agree on something -- Batch scripting is

|

||||||

|

terrible and should go die in a fiery pit where all the other Windows

|

||||||

|

software goes? I don't think I can name any useful features of batch

|

||||||

|

scripting besides text-based (and frankly pointless) DOS-like games. It

|

||||||

|

sucks.

|

||||||

|

|

||||||

|

I recently tried to port the NodeMC install script from Linux (bash) to

|

||||||

|

Windows (batch), and while it seemed possible, the simple tasks of

|

||||||

|

downloading a file, unzipping it, and moving some others around, it

|

||||||

|

proved to be utterly impossible.

|

||||||

|

|

||||||

|

_A quick note before I proceed -- Yes, I realize something like a custom built installer in a more traditional language would have been possible, however I wanted to see what I could do without it. Also I'm lazy._

|

||||||

|

|

||||||

|

First and foremost, there is no native way to download files properly.

|

||||||

|

Most Linux distros ship with cURL or wget (or are installed fairly early

|

||||||

|

on), which are both great options for downloading and saving files from

|

||||||

|

the internet. On Windows, it is suggested

|

||||||

|

[BITS](https://en.wikipedia.org/wiki/Background_Intelligent_Transfer_Service) could do the job. However, on execution, it simply

|

||||||

|

*does not work*. I got complaints from Windows about how it didn't want

|

||||||

|

to do the thing. *Fine*. Let's move on to the other infuriating thing.

|

||||||

|

|

||||||

|

Stock Windows has the ability to unzip files just file. So why the hell

|

||||||

|

can I not call that from batch? There is no reason I shouldn't be able

|

||||||

|

to. But alas, it cannot be done, [at least not

|

||||||

|

easily](https://stackoverflow.com/questions/21704041/creating-batch-script-to-unzip-a-file-without-additional-zip-tools) \*grumble grumble\*

|

||||||

|

|

||||||

|

In conclusion: Batch is useless. It should be eradicated and replaced

|

||||||

|

with something useful. Because as of now it has very little if any

|

||||||

|

redeeming qualities that make me want to use it.

|

||||||

33

posts/a-post-a-day.md

Normal file

33

posts/a-post-a-day.md

Normal file

|

|

@ -0,0 +1,33 @@

|

||||||

|

---

|

||||||

|

title: A Post A Day...

|

||||||

|

date: 2015-10-28

|

||||||

|

---

|

||||||

|

|

||||||

|

#### Keeps the psychiatrist away.

|

||||||

|

|

||||||

|

I'm trying to keep up with my streak of a post every day on Medium,

|

||||||

|

mostly because I've found it really fun to write. I think Wednesdays

|

||||||

|

will become a sort of 'weekly check-in', going over what I have done and

|

||||||

|

what there is yet to do.

|

||||||

|

|

||||||

|

So, what have I accomplished this week?

|

||||||

|

|

||||||

|

- Created a link shortner.

|

||||||

|

- Made the staff page for Creator Studios.

|

||||||

|

- Started updating [my homepage](http://gabrielsimmer.com)

|

||||||

|

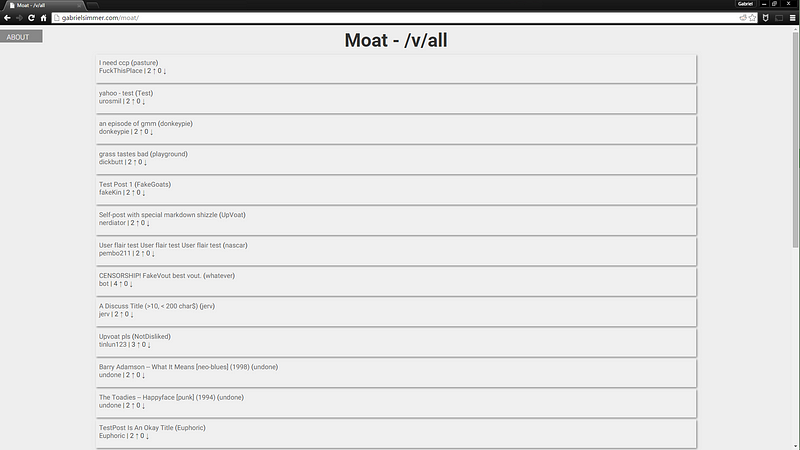

- Fixed an issue with Moat-JS (pushing out soon).

|

||||||

|

- Removed mc.gabrielsimmer.com and all the deprecated projects.

|

||||||

|

- Moved to Atom for development.

|

||||||

|

|

||||||

|

And what do I hope to accomplish?

|

||||||

|

|

||||||

|

- Fix my link shortner.

|

||||||

|

- Work on the video submission page for Creator Studios.

|

||||||

|

- Whatever else needs doing.

|

||||||

|

- Complete my week-long streak of Medium posts.

|

||||||

|

|

||||||

|

It's hard to list what I want to accomplish because things just come up

|

||||||

|

that I have no control over. But nonetheless, I will report back next

|

||||||

|

Wednesday.

|

||||||

|

|

||||||

|

Happy coding!

|

||||||

29

posts/ajax-is-cool.md

Normal file

29

posts/ajax-is-cool.md

Normal file

|

|

@ -0,0 +1,29 @@

|

||||||

|

---

|

||||||

|

title: AJAX Is Cool

|

||||||

|

date: 2015-11-07

|

||||||

|

---

|

||||||

|

|

||||||

|

#### Loading content has never been smoother

|

||||||

|

|

||||||

|

I started web development (proper web development anyways) using pretty much only PHP and HTML -- I didn't touch JavaScript at all, and CSS was usually handled by Bootstrap. I always thought I was doing it wrong, but I usually concluded the same thing every time.

|

||||||

|

|

||||||

|

> "JavaScript is too complex. I'll stick with PHP."

|

||||||

|

|

||||||

|

Only recently have I been getting into JavaScript, and surprisingly have

|

||||||

|

been enjoying it. I've been using mainly AJAX and jQuery for GET/POST

|

||||||

|

requests for loading and displaying content, which I do realize is *just

|

||||||

|

barely* scratching the surface of what it can do, but it's super useful

|

||||||

|

because I can do things such as displaying a loading animation while it

|

||||||

|

fetches the data or provide nearly on-the-fly updating of a page's

|

||||||

|

content (I am working on updating ServMineUp to incorporate AJAX for

|

||||||

|

this reason). I'm absolutely loving my time with it, and I hope I'll be

|

||||||

|

able to post more little snippets of code like I did for

|

||||||

|

[HoverZoom.js](/posts/hoverzoom-js). Meanwhile, I encourage everyone to try their hand at AJAX & JavaScript. It's powerful and amazing.

|

||||||

|

|

||||||

|

#### Useful Resources

|

||||||

|

|

||||||

|

[jQuery API](http://api.jquery.com/)

|

||||||

|

|

||||||

|

[JavaScript on Codecademy](https://www.codecademy.com/learn/javascript)

|

||||||

|

|

||||||

|

[JavaScript docs on MDN](https://developer.mozilla.org/en-US/docs/Web/JavaScript)

|

||||||

39

posts/an-api-a-day.md

Normal file

39

posts/an-api-a-day.md

Normal file

|

|

@ -0,0 +1,39 @@

|

||||||

|

---

|

||||||

|

title: An API A Day

|

||||||

|

date: 2016-07-11

|

||||||

|

---

|

||||||

|

|

||||||

|

#### Keeps... other productivity away?

|

||||||

|

|

||||||

|

[Update: GitHub org is available here with more info & rules.](https://github.com/apiaday)

|

||||||

|

|

||||||

|

I've been in a bit of a slump lately, NodeMC hasn't

|

||||||

|

been as inspiring and development has been a bit slow, other projects

|

||||||

|

are on hold as I wait for third parties, so I haven't really been

|

||||||

|

working on much development wise.

|

||||||

|

|

||||||

|

#### So I can up with a challenge: **An API A Day**.

|

||||||

|

|

||||||

|

The premise is simple -- Every day, I pick a new API to build an

|

||||||

|

application with from [this list](https://www.reddit.com/r/webdev/comments/3wrswc/what_are_some_fun_apis_to_play_with/) (from reddit). From the time I start I have the rest

|

||||||

|

of the day to build a fully-functional prototype, bugs allowed but core

|

||||||

|

functionality must be there. And it can't just be a "display all the

|

||||||

|

data" type app, it has to be interactive in some form.

|

||||||

|

|

||||||

|

An example of this is [Artdio](http://gmem.pw/artdio), which was the inspiration for this challenge. I

|

||||||

|

built the page in about 3 hours using SoundCloud's JavaScript API

|

||||||

|

wrapper, just as a little "how does this work" sort of challenge.

|

||||||

|

|

||||||

|

So how is this going to be organised?

|

||||||

|

|

||||||

|

I'm going to create a GitHub organization that will house the different

|

||||||

|

challenges as separate repositories. To contribute, all you'll need to

|

||||||

|

do is fork the specific day / API, copy your project into it's own

|

||||||

|

folder (named YOURPROJECTNAME-YOURUSERNAME), then create a pull request

|

||||||

|

to the main repository. I don't know the specific order I personally

|

||||||

|

will be going through these APIs, so chances are I will bulk-create

|

||||||

|

repositories so you can jump around at your own pace, or you can request

|

||||||

|

a specific repository be created.

|

||||||

|

|

||||||

|

If you have any questions or need something cleared up, feel free to

|

||||||

|

[tweet at me :)](https://twitter.com/gmem_)

|

||||||

120

posts/building-a-large-scale-server-monitor.md

Normal file

120

posts/building-a-large-scale-server-monitor.md

Normal file

|

|

@ -0,0 +1,120 @@

|

||||||

|

---

|

||||||

|

title: Building a Large-Scale Server Monitor

|

||||||

|

date: 2017-01-24

|

||||||

|

---

|

||||||

|

|

||||||

|

#### Some insight into the development of [Platypus](https://github.com/ggservers/platypus)

|

||||||

|

|

||||||

|

If you've been around for a while, you may be aware I

|

||||||

|

work at [GGServers](https://ggservers.com) as a developer primarily focused on exploring new

|

||||||

|

areas of technology and computing. My most recent project has been

|

||||||

|

Platypus, a replacement to our very old status page

|

||||||

|

([here](https://status.ggservers.com/), yes we know it's down). Essentially, I had three

|

||||||

|

goals I needed to fulfil.

|

||||||

|

|

||||||

|

1. [Able to check whether a panel (what we refer to our servers as, for

|

||||||

|

they host the Multicraft panel) within our large network is offline.

|

||||||

|

This is by far the easiest part of the project, however

|

||||||

|

implementation and accuracy was a problem.]

|

||||||

|

2. [Be able to fetch server usage statistics from a custom script which

|

||||||

|

can be displayed on a webpage so we can accurately monitor which

|

||||||

|

servers are under or over utilised.]

|

||||||

|

3. [Build a Slack bot to post updates of downed panels into our panel

|

||||||

|

reporting channel.]

|

||||||

|

|

||||||

|

#### Some Rationale

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

*Why did you choose Python? Why not Node.js or even PHP (like our

|

||||||

|

current status page)?* Well, I wanted to learn Python, because it's a

|

||||||

|

language I never fully appreciated until I built tfbots.trade (which is

|

||||||

|

broken, I know, I haven't gotten around to fixing it). At that point, I

|

||||||

|

sort of fell in love with the language, the wonderful syntax and PEP8

|

||||||

|

formatting. Regardless of whether I loved it or not, it is also a hugely

|

||||||

|

important language in the world of development, so it's worth learning.

|

||||||

|

|

||||||

|

*Why do you use JSON for all the data?* I like JSON. It's easy to work

|

||||||

|

with, with solid standards and is very human readable.

|

||||||

|

|

||||||

|

#### Tackling Panel Scanning

|

||||||

|

|

||||||

|

[Full video](https://youtu.be/xAXT1mOFccM)

|

||||||

|

|

||||||

|

Right so the most logical way to see if a panel is down is to make a

|

||||||

|

request and see if it responds. So that's what I did. However there were

|

||||||

|

a few gotchas along the way.

|

||||||

|

|

||||||

|

First, sometimes our panels aren't actually **down**, but just take a

|

||||||

|

little bit to respond because of various things like CPU load, RAM

|

||||||

|

usage, etc., so I needed to determine a timeout value so that scanning

|

||||||

|

doesn't take too long (CloudFlare adds some latency between a client and

|

||||||

|

the actual "can't reach server" message). Originally, I had this set to

|

||||||

|

one second, thinking that even though my own internet isn't fast enough,

|

||||||

|

the VPS I deployed it to should have a fast enough network to reach

|

||||||

|

them. This turned out to not be true -- I eventually settled on 5

|

||||||

|

seconds, which is ample time for most panels to respond.

|

||||||

|

|

||||||

|

Originally I believed that just fetching the first page of the panel (in

|

||||||

|

our case, the login for Multicraft), would be effective enough.

|

||||||

|

Unfortunately what I did not consider is all the legwork the panel

|

||||||

|

itself has to do to render out that view (Multicraft is largely

|

||||||

|

PHP-based). But fortunately, the request doesn't really care about the

|

||||||

|

result it gets back (*yet*). So to make it easier, I told the script to

|

||||||

|

get whatever is in the /platy/ route. This of course makes it easier for

|

||||||

|

deployment of the stat scripts, but I'll get to those in a bit.

|

||||||

|

|

||||||

|

Caching the results of this scan is taken care of by my useful JSON

|

||||||

|

caching Python module, which I haven't forked off because I don't feel

|

||||||

|

it's very fleshed out. That said, I've used it in two of my handful of

|

||||||

|

Python projects (tfbots and Platypus) and it has come in very handy

|

||||||

|

([here's a gist of it](https://gist.github.com/gmemstr/78d7525b1397c35b7db6cfa858f766c0)). It handles writing and reading cache data with no

|

||||||

|

outside modules aside from those shipped with Python.

|

||||||

|

|

||||||

|

#### Stat Scripts

|

||||||

|

|

||||||

|

An integral part of a status page within a Minecraft hosting company is

|

||||||

|

being able to see the usage stats from our panels. I wrote two scripts

|

||||||

|

to help with this, one in Python and one in PHP, which both return the

|

||||||

|

same data. It wasn't completely necessary to write two versions, but I

|

||||||

|

was not sure which one would be favoured for deployment, and I figured

|

||||||

|

PHP was a safe bet because already we have PHP installed on our panels.

|

||||||

|

The Python script was a backup, or if others wanted to use Platypus but

|

||||||

|

without the kerfuffle of PHP.

|

||||||

|

|

||||||

|

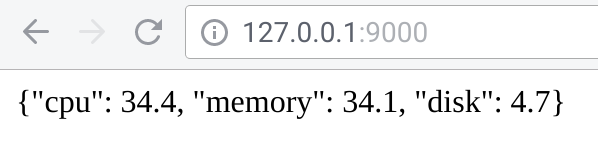

The script(s) monitor three important usage statistics; CPU, RAM and

|

||||||

|

disk space. It returns this info as a JSON array, with no extra frills.

|

||||||

|

The Python script implements a minimal HTTP server to handle requests as

|

||||||

|

well, and only relies on the psutil module for getting stats.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

#### Perry the Platypus

|

||||||

|

|

||||||

|

Aka the Slack bot, which we have affectionately nicknamed. This was the

|

||||||

|

most simple part of the project to implement thanks to the

|

||||||

|

straightforward library Slack has for Python. Every hour, he/she/it

|

||||||

|

(gender undecided, let's not force gender roles people! /s) posts to our

|

||||||

|

panel report channel with a list of the downed panels. This is the part

|

||||||

|

most subject to change as well, because after a while it feel a lot like

|

||||||

|

a very annoying poke in the face every hour.

|

||||||

|

|

||||||

|

#### →Going Forward →

|

||||||

|

|

||||||

|

I am to continue to work on Platypus for a while; I am currently

|

||||||

|

implementing multiprocessing so that when it scans for downed panels,

|

||||||

|

the web server can still respond. I am having some funky issues with

|

||||||

|

that though, namely the fact Flask seems to be blocking the execution of

|

||||||

|

functions once it is started. I'm sure there's a fix, I just haven't

|

||||||

|

found it yet. I also want to make the frontend more functional -- I am

|

||||||

|

definitely implementing refreshing stats with as little JavaScript as

|

||||||

|

possible, and maybe making it slightly more compact and readable. As for

|

||||||

|

the backend, I feel like it's pretty much where it needs to be, although

|

||||||

|

it could be a touch faster.

|

||||||

|

|

||||||

|

Refactoring the code is also on my to do list, but that is for much,

|

||||||

|

much farther down the line.

|

||||||

|

|

||||||

|

I also need an adorable logo for the project.

|

||||||

|

|

||||||

|

|

||||||

64

posts/chromium-foundation.org

Normal file

64

posts/chromium-foundation.org

Normal file

|

|

@ -0,0 +1,64 @@

|

||||||

|

#+title: Chromium Foundation

|

||||||

|

#+date: 2021-12-03

|

||||||

|

|

||||||

|

*** We need to divorce Chromium from Google

|

||||||

|

|

||||||

|

The world of browsers is pretty bleak. We essentially have one viable player, Chromium,

|

||||||

|

flanked by the smaller players of Firefox, spiraling in a slow painful self destruction,

|

||||||

|

and Safari, a browser well optimized for Apple products.

|

||||||

|

|

||||||

|

/note: We're specifically discussing browsers, but you can roughly equate the arguments I make later with the browser's respective engines./

|

||||||

|

|

||||||

|

The current state of browsers is a difficult one. Chromium has the backing of notable

|

||||||

|

megacorps and is under the stewardship of Google, which come with a number of perks

|

||||||

|

(great featureset, performance, talented minds working on a singular project, etc)

|

||||||

|

and a number of downsides as well (Google has a vested interested in advertisements, for example).

|

||||||

|

Firefox is off in the corner with a declining marketshare as it alienates its users

|

||||||

|

in a flailing attempt to gain users from the Chromium market, including dumping XUL addons

|

||||||

|

in favour of WebExtensions, some rather uneccesary UI refreshes (subjective, I suppose),

|

||||||

|

and various other unsavoury moves that leave a bad taste in everyone's mouth. And Safari

|

||||||

|

is in the other corner, resisting the web-first movement as applications move to web technologies

|

||||||

|

and APIs in the name of +control+ privacy and effeciency. While I don't think Safari neccesarily

|

||||||

|

holds back the web, I think it could make a more concerted effort to steer it.

|

||||||

|

|

||||||

|

With all that said, it's easy to come to the conclusion that the web has a monoculture browser

|

||||||

|

problem; over time, Chromium will emerge the obvious victor. And that's not great, but not because

|

||||||

|

there would be only one browser engine.

|

||||||

|

|

||||||

|

The web in 2021 is a complex place - due to a number of factors it's no longer simple documents located on

|

||||||

|

webservers, but we now have what are ostensibly desktop applications loaded in a nested operating system. For better or worse,

|

||||||

|

this is where we've ended up, and that brings a /lot/ of hard problems to solve for a new browser. This is

|

||||||

|

why I believe there really hasn't been any new mainstream (key word!) browser engines - the web is simply

|

||||||

|

too complex. The browser pushing this "forward" (somewhat subjective) is Chromium, but Chromium is controlled

|

||||||

|

by Google. While there are individuals and other corporations contributing code, Google still controls Chromium, and

|

||||||

|

this makes a fair few people uneasy given Google's primary revenue source - ads, and in turn tracking. Logically,

|

||||||

|

we want a more diverse set of browsers to choose from, free of Google's influence! Forget V8/Blink, we need

|

||||||

|

independant engines! Full backing for Gecko and WebKit! Well, yes, but actually, no. We need to throw

|

||||||

|

effort behind one engine free of Google's clutches, but it should be Chromium/V8/Blink.

|

||||||

|

|

||||||

|

Hear me out (credit to @Slackwise for planting the seed of this in my head) - we should really opt to tear the most successful

|

||||||

|

engine from Google's clutches and spin it off into its own entity. One with a nonprofit structure similar

|

||||||

|

to how Linux manages itself (a good example of a large scale effort in a similar vein). The web is simply

|

||||||

|

too complex at this point for new engines to thrive (see: Servo), and the other two options, Gecko from Mozilla/Firefox

|

||||||

|

and WebKit from Safari/Apple, are having a really hard time evolving and playing catch up. With a foundation dedicated to the

|

||||||

|

engine, and a licensing or sponsorship model built out, I genuinely believe that it would be better

|

||||||

|

in the long run for the health and trust of the internet. We can still have Chromium derivatives, with

|

||||||

|

their unique takes or spins, so it would not reduce the choice available (besides, people choose based on features, not engine).

|

||||||

|

Concentrating effort into a single browser engine rather than fragmenting the effort across a handful might allow

|

||||||

|

for some really great changes to the core of the engine, whether it be performance, better APIs, more privacy

|

||||||

|

respecting approaches, and so on. It also finally eliminates the problem of cross browser incompatibilities.

|

||||||

|

|

||||||

|

Would it stagnate? Maybe. It's entirely possible this is a terrible idea that would stiffle innovation. But

|

||||||

|

given the success and evolution of other projects with a matching scale (Linux), and the constant demands

|

||||||

|

for new "things" for the web, I feel confident that we could maintain a healthy internet with a single engine.

|

||||||

|

And remember, it's okay for something to be "done". Constantly shipping new features isn't neccesarily a plus -

|

||||||

|

while we see new features shipping as a sign of activity and life, it's perfectly fine for us to take a step back

|

||||||

|

and work on bugs and speed improvements. And if something isn't satisfactory, I'm pretty confident that the

|

||||||

|

project could be properly forked with improvements or changes made later upstreamed, in the spirit of open

|

||||||

|

source and collaboration.

|

||||||

|

|

||||||

|

There are calls for breaking up the large technology companies, but I don't really want to delve much

|

||||||

|

into that here, or even consider this a call to action. Instead, I want this to serve as mild musings and

|

||||||

|

hopefully get the seed of an idea out there, an idea discussed a few times in a private Discord guild. I don't

|

||||||

|

expect this to ever become a reality without some strongarming from some government body, but I hold out some

|

||||||

|

hope.

|

||||||

76

posts/couchdb-as-a-backend.md

Normal file

76

posts/couchdb-as-a-backend.md

Normal file

|

|

@ -0,0 +1,76 @@

|

||||||

|

---

|

||||||

|

title: Using CouchDB as a Website Backend

|

||||||

|

date: 2022-09-25

|

||||||

|

---

|

||||||

|

|

||||||

|

**She's a Coucher!**

|

||||||

|

|

||||||

|

<iframe width="560" height="315" src="https://www.youtube-nocookie.com/embed/m1eooqIyjbM" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

|

||||||

|

|

||||||

|

Not too long ago, I was shown [CouchDB](https://couchdb.apache.org/), a wonderous database promising to let me relax. It was presented

|

||||||

|

as a database that would be able to effectively act as a standalone app backend, without any need for a custom backend for simple applications.

|

||||||

|

At the time, I didn't think I had any use for a document store (or "NoSQL database"), nor did I want to remove any of my existing custom

|

||||||

|

backends - I spent so long writing them! Deploying them! But then, as I started to think about what would need to go into adding a new

|

||||||

|

type of commission my girlfriend wanted to accept to her website, including uploading files, managing the schema etc. I realised that actually,

|

||||||

|

her website is pretty well served by a document database.

|

||||||

|

|

||||||

|

Allow me to justify; the content [on their website](https://artbybecki.com) is completely standalone - there's no need for relations, and I want

|

||||||

|

to allow flexibility to add whatever content or commission types that she wants without me needing to update a bunch of Go code and deploy it

|

||||||

|

(as much as I may love Fly.io at the moment), while also performing any migrations to the PostgreSQL database.

|

||||||

|

So with that in mind, I started to write a proof of concept, moving the database of the existing

|

||||||

|

Go backend to use CouchDB instead. This was surprisingly easy - CouchDB communicates over HTTP, returning JSON, so I just needed to use the stdlib

|

||||||

|

HTTP client Go provides. But I found that the more I wrote, the more I was just layering a thin proxy over CouchDB that didn't need to exist!

|

||||||

|

Granted, this thin proxy did do several nice things, like conveniently shortcutting views, or providing a simple token-based authentication for

|

||||||

|

editing entries. But at the end of the day, I was just passing CouchDB JSON directly through the API, and realised I could probably scrap it

|

||||||

|

all together. Not only is it one less API to maintain and update, but I get to play around with a new concept - directly querying and modifying

|

||||||

|

a database from the frontend of the website! Something typically labelled as unwanted, but given CouchDB's method of communication I was willing

|

||||||

|

to give it a shot.

|

||||||

|

|

||||||

|

Thus, I `rm -rf backend/`'d and got to work, getting a handle of how CouchDB works. The transition was really easy - the data I wanted was still

|

||||||

|

being returned in a format I could easily handle, and after writing some simple views I got a prototype put together.

|

||||||

|

|

||||||

|

|

||||||

|

_views are just JS!_

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

(this does still mean there's a bit of manual legwork I have to do when she wants to add a new type, but I'd have to tweak the frontend anyways)

|

||||||

|

|

||||||

|

The tricky part came when it was time to move the admin interface to use the CouchDB API. I wanted to use CouchDB's native auth, of course, and

|

||||||

|

ideally the cookies that it provides on one of its authentication endpoints. The best I could come up with, for the moment, is storing the username

|

||||||

|

and password as a base64 encoded string and sending it along as basic HTTP authentication, for the time being. These are only stored in-memory, so while

|

||||||

|

I do feel a shred of guilt storing the username and password in essentially plaintext, it's at least difficult to get to - and the application is only

|

||||||

|

used by me and my partner, so the radius is relatively small.

|

||||||

|

|

||||||

|

One minor note, on this topic, on permissions. CouchDB doesn't have a granular permission system, and is sort of an all-or-nothing thing - either your

|

||||||

|

database is open for everyone to read and write, or just one group/user. Thankfully, you can use a design document with a validation function to restrict

|

||||||

|

the modification of the database to users or groups, but it's a little annoying that it's not technically native, but it does seem to be working just fine

|

||||||

|

so until something breaks it seems like the best approach.

|

||||||

|

|

||||||

|

There was also the question of where to store images - the custom API I wrote uploaded

|

||||||

|

images to Backblaze B2, which is proxied through Cloudflare and processed with Cloudinary's free offering for optimising images. Thankfully, the answer

|

||||||

|

is "just shove it into CouchDB!". CouchDB natively understands attachments for documents, so I don't have to do any funky base64 encoding into the

|

||||||

|

document itself. It's hooked up to Cloudinary as a data source, so images are cached and processed on their CDN - the B2/Cloudflare approach was

|

||||||

|

okay, if a little slow, but using CouchDB for this was _really_ slow, so this caching is pretty much mandatory. Also on the caching front, I opted

|

||||||

|

to put an AWS Cloudfront distribution in front of the database itself to cache the view data. While this slows down updates, it also lessens the

|

||||||

|

load on the database (currently running on a small Hetzner VPS) and speeds up fetching the data.

|

||||||

|

|

||||||

|

_side note: Given CouchDB's replication features, and my want to have a mostly globally distributed CDN for the data, I'm considering looking into

|

||||||

|

using CouchDB on Fly.io and replicating the database(s) between regions! Follow me on [Twitter](https://twitter.com/gmem_) or [Mastodon](https://tech.lgbt/@arch)

|

||||||

|

for updates._

|

||||||

|

|

||||||

|

Migration from the previous API and the development environment was a breeze as well - I wrote a simple Python script that just pulls the API

|

||||||

|

and inserts the objects in the response into their own documents, then uploading the images associated with the object to the database itself.

|

||||||

|

The entire process is pretty quick, and only has to be done once more when I finally merge the frontend changes point to the database's API.

|

||||||

|

|

||||||

|

Using a database directly as an API for a CRUD interface is a very odd feeling, and even more odd exposing it directly to end users of a website.

|

||||||

|

But all things considered, it works really well and I'm excited to keep exploring what CouchDB can offer. I don't know if I have enough of an

|

||||||

|

understanding of the database to recommend it as a backend replacement for simple applications, but I _do_ recommend considering it for simple

|

||||||

|

APIs, and shipping with a web GUI for management (Fauxton)is incredibly helpful for experimenting. My stance on "SQL all the things!" has shifted

|

||||||

|

substantially and I recognise that 1) traditional SQL databases are actually a _really_ clunky way of handling webapp data and 2) it's fine to not

|

||||||

|

have relational data.

|

||||||

|

|

||||||

|

I'm going to be exploring the database much more, with CouchDB and PouchDB, [SurrealDB](https://surrealdb.com/), and continuing keep an eye on

|

||||||

|

SQLite thanks to [LiteFS](https://github.com/superfly/litefs) and [Litestream](https://litestream.io/) piquing my, and the rest of the internet's,

|

||||||

|

interest. I also want to invest a little bit of time into time series databases like Prometheus, InfluxDB or QuestDB, although those are a little

|

||||||

|

lower on my priority list.

|

||||||

86

posts/creating-an-artists-website.org

Normal file

86

posts/creating-an-artists-website.org

Normal file

|

|

@ -0,0 +1,86 @@

|

||||||

|

#+title: Creating an Artist's Website

|

||||||

|

#+date: 2022-05-14

|

||||||

|

|

||||||

|

*** So my girlfriend is doing comissions...

|

||||||

|

|

||||||

|

If you're coming to this post expecting some magical journey into the design

|

||||||

|

and implementation of some fancy abstract website, I'm sorry to disappoint -

|

||||||

|

this will instead be a relatively technical post about building out a /very/

|

||||||

|

simple web frontend for both displaying comission information, building out an API

|

||||||

|

(or more specifically, a headless content management system) for managing a few

|

||||||

|

specific bits of content on said site (including images and text. Yay!), and the

|

||||||

|

trials and tribulations of deploying said API, which includes a rewrite from

|

||||||

|

TypeScript to Go.

|

||||||

|

|

||||||

|

A little more background, to clarify who the artist in question is and their use case.

|

||||||

|

My girlfriend has recently been taking on some art comissions, specifically around

|

||||||

|

texturing avatars for VRChat. To make life easier, I decided to create a website

|

||||||

|

for her that she could direct potential clients to, allowing them to have a look at

|

||||||

|

the work she's completed, pricing estimates, and contact information. It began life as

|

||||||

|

a simply gallery of photos, but didn't quite fit the above goals, and thus a plan was

|

||||||

|

born (after consultation with the client) - custom made information sheets for each

|

||||||

|

character, paired with a little blurb about what was done to reach the end product.

|

||||||

|

The goal of having it be editable through an interface rather than manually editing

|

||||||

|

HTML was forefront in my mind, so early into this design I opted to store the

|

||||||

|

information as a static object in the JavaScript, intending to swap it out later.

|

||||||

|

Frontend isn't really my speciality, so we'll say that it was relatively

|

||||||

|

straightforward to put together and move on to the exciting piece - the API.

|

||||||

|

|

||||||

|

My initial reaction was to leverage CloudFlare Page's /functions/ feature, which

|

||||||

|

allows the creation of "serverless" functions alongside the static website (they

|

||||||

|

also offer dedicated "Workers", but these appear to be intended as standalone

|

||||||

|

endpoints rather than being developed in tandem with the site). Storing the comission

|

||||||

|

sheets and the associated data was easy with the K/V store offered to the functions,

|

||||||

|

but I began to encounter issues as soon as files got involved. While the runtime the

|

||||||

|

functions are contained in /seems/ complete, it's a bit deceptive - in this instance,

|

||||||

|

I found that the =File= / =Blob= API simply didn't exist, which put a big block in

|

||||||

|

front of my plan to more or less proxy the image over to the GitHub repository,

|

||||||

|

allowing me to store the images for free and very easily link and load them.

|

||||||

|

Unfortunately, GitHub's API requires the contents of the files to be base64 encoded,

|

||||||

|

and the limitations of the function's runtime environment made this difficult. I did

|

||||||

|

manage to get the files to upload, but it would transform into a text file rather

|

||||||

|

than the PNG it should be.

|

||||||

|

|

||||||

|

After wrestling with this problem for a day, attempting various changes, I decided

|

||||||

|

to throw the whole function idea into the bin and look at a traditional long-running

|

||||||

|

server process, and ditched the idea of storing files in GitHub as it would only lead

|

||||||

|

to frustrations when trying to do development and push changes, opting instead for

|

||||||

|

Backblaze's B2 offering (mostly because I'd used it before and the pricing was

|

||||||

|

reasonable, paired with the free bandwidth as it goes through CloudFlare). Not wanting

|

||||||

|

to pass up and opportunity to pick up at least one new technology, I opted to leverage

|

||||||

|

[[https://fly.io][Fly.io]]'s free plan. My initial impression of Fly.io was limited, having only read

|

||||||

|

a handful of their blog posts, but upon further inspection (and I'm still standing

|

||||||

|

by this after using the product for a while) it felt more like an evolved, but less

|

||||||

|

mature, Heroku, offering a very similar fashion of deployment but with some added

|

||||||

|

niceties like persistant volumes and free custom domains.

|

||||||

|

|

||||||

|

The first prototype leveraged SQLite with a persistant volume, since I didn't expect to

|

||||||

|

need anythinig more complex - a flat file structure would have been fine, but where's the

|

||||||

|

fun in that? And this actually worked fine, but I quickly found out that during deploys,

|

||||||

|

the API would be unavailable as it updated to the latest version, and I figured the best

|

||||||

|

way to resolve this would be to scale up to 2 instances of the app, so there would always

|

||||||

|

be one instance available as the update rolled out. Ah! The keen eyed reader may say.

|

||||||

|

"How will you replicate the SQLite database?" This... was a problem I had not considered,

|

||||||

|

and thus went looking for answers to avoid spinning up a hosted database. With Fly.io

|

||||||

|

having just aquired a company that builds a product specifically for this purpose, I

|

||||||

|

figure this feature may be coming in the future, but after a little digging I decided to

|

||||||

|

opt for a PostgreSQL database running on Fly.io. Blissfully easy to set up, with two

|

||||||

|

commands required to create the database then create a connection string for the app

|

||||||

|

itself, injected as an environment variable. After some manual migration (there were

|

||||||

|

only a few records to migrate, so better sooner than later) and a deployment to swap over

|

||||||

|

to the postgres datbase in the Go app, we were off to the races! Deployments now don't

|

||||||

|

take the API offline, and I can scale up as I need without worrying about replicating the

|

||||||

|

SQLite datbase. Success!

|

||||||

|

|

||||||

|

/sidenote: this codebase is unlike to be open sourced anytime soon because it's... real messy. but keep an eye on my [[https://tech.lgbt/@arch][Mastodon]]/

|

||||||

|

|

||||||

|

I know I've glossed over the file storage with Backblaze B2 a bit, but it's not really

|

||||||

|

anything to note as exciting. The setup with CloudFlare was largely a [[https://www.backblaze.com/blog/free-image-hosting-with-cloudflare-transform-rules-and-backblaze-b2/][standard affair]] with

|

||||||

|

some DNS entries and URL rules, and leveraging the S3 API and Go libraries made it a

|

||||||

|

breeze to setup in the API itself. It's "fast enough", with caching, and the peering

|

||||||

|

agreements between CloudFlare and Backblaze mean I only pay for the storage, which is much

|

||||||

|

less than it would cost to use some other S3-compatible provider (say, AWS itself).

|

||||||

|

|

||||||

|

My current task is getting the admin panel up to snuff, but it's very functional at the

|

||||||

|

moment and easy enough for my girlfriend to use to update the content of the site, so

|

||||||

|

at this point I'm satisfied with the [[https://artbybecki.com][current result]]. I now await further instructions.

|

||||||

89

posts/current-infrastructure-2022.org

Normal file

89

posts/current-infrastructure-2022.org

Normal file

|

|

@ -0,0 +1,89 @@

|

||||||

|

#+title: Current Infrastructure (2022)

|

||||||

|

#+date: 2022-07-11

|

||||||

|

|

||||||

|

*** Keep it interesting

|

||||||

|

|

||||||

|

My personal infrastructure has evolved quite significantly over the years, from

|

||||||

|

a single Raspberry Pi 1, to a Raspberry Pi 2 and ThinkCenter mini PC, to my

|

||||||

|

current setup consisting of two Raspberry Pis, a few cloud servers, and a NAS that

|

||||||

|

is currently being put together.

|

||||||

|

|

||||||

|

At the heart of my infrastructure is my [[https://tailscale.com/kb/1136/tailnet/][tailnet]]. All machines, server, desktop, mobile, whatever,

|

||||||

|

get added to the network, mostly for ease of access. One of my Pis at home serves as an exit

|

||||||

|

node, exposing my home's subnet (sometimes called dot-fifty because of the default subnet the

|

||||||

|

router creating) so I can access the few devices that I can't install Tailscale on. The

|

||||||

|

simplicity of adding new devices to the network has proved very useful, and has encouraged me to

|

||||||

|

adopt this everything-on-one-network approach.

|

||||||

|

|

||||||

|

The servers on the network run a few key pieces of infrastructure. At home, the same Pi that

|

||||||

|

serves as the Tailscale exit node also runs [[https://k3s.io/][k3s]] to coordinate the hosting of my Vaultwarden,

|

||||||

|

container registry, and [[github.com/gmemstr/hue-webapp][hue webapp]] applications. This same Pi also serves Pihole, which has yet

|

||||||

|

to be moved into k3s (but it will be soon). While k3s is a fairly optimised distribution of

|

||||||

|

Kubernetes, it does beg the question "why deploy it? why not just run docker, or docker compose,

|

||||||

|

or whatever else?". The simple answer is "I wanted to". The other simple answer is that it is

|

||||||

|

an excellent learning exercise. I deal with Kubernetes on a fairly regular basis both at my

|

||||||

|

day job and at [[https://furality.org][Furality]] (I'll be writing a dedicated post delving into the tech powering that),

|

||||||

|

so having one or two personal deployments doesn't hurt for experimentation and learning. Plus,

|

||||||

|

it's actually simplified my workflow for deploying applications to self host, and forced me to

|

||||||

|

setup proper CI/CD workflows to push things to my personal container registry. This isn't

|

||||||

|

anything special, just [[https://docs.docker.com/registry/deploying/][Docker's own registry server]] which I can push whatever images I want and

|

||||||

|

pull them to whatever machine I need, provided said machine is connected to the tailnet.

|

||||||

|

Thankfully Tailscale is trivial to use in CI/CD pipelines, so I don't ever have to expose

|

||||||

|

this registry to the wider internet.

|

||||||

|

|

||||||

|

Also at home I have my media library, which runs off a Raspberry Pi 4b connected to a 4TB external

|

||||||

|

hard drive. This is the first thing that will be moved to the NAS being built, as it can struggle

|

||||||

|

with some media workloads. It hosts an instance of [[https://jellyfin.org/][Jellyfin]] for watching things back, but I tend

|

||||||

|

to use the exposed network share instead, since the Pi can sometimes struggle to encode video

|

||||||

|

to serve through the browser. Using it as a "dumb" network share is mostly fine, but you do

|

||||||

|

lose some of the nice features that come with a more full featured client, like resuming playback

|

||||||

|

across devices or a nicer interface for picking what to watch. There's really nothing much more

|

||||||

|

to say about this Pi. When the NAS is built, the work it does will be moved to that, and the k3s

|

||||||

|

configuration currently running on my Pi 3b will move to it. At that point it's likely I'll

|

||||||

|

actually cluster the two together, depending whether I find another workload for it.

|

||||||

|

|

||||||

|

Over in the datacenter world, I have a few things running that are slightly less critical. For

|

||||||

|

starters, I rent a 1TB storage box from [[https://www.hetzner.com][Hetzner]] for backing things up off-site. Most of it is just

|

||||||

|

junk, and I really should get around to sorting it out, but there's a lot of files and directories

|

||||||

|

and it's easier to just lug it around (I say that, it might actually be easier to just remove

|

||||||

|

most of it since I rarely access the bulk of it). This is also where backups of my Minecraft server

|

||||||

|

are sent to on a daily basis. This Minecraft server runs on [[https://www.oracle.com/uk/cloud/free/][Oracle Cloud's free tier]], specifically

|

||||||

|

on a 4-core 12GB ARM based server. It performs pretty well considering it's only really me and my

|

||||||

|

girlfriend playing on the server, and while I may not be the biggest fan of Oracle, it doesn't

|

||||||

|

cost me anything (I do keep checking to make sure though!). Also running on Oracle Cloud is an

|

||||||

|

instance of [[https://github.com/louislam/uptime-kuma][Uptime Kuma]], which is a fairly simple monitoring tool that makes requests to whatever

|

||||||

|

services I need to keep an eye on every minute or so. This runs on the tiny AMD-based server

|

||||||

|

the free tier provides, and while I ran into a bit of trouble with the default request interval

|

||||||

|

for each service (it's currently monitoring 12 different services), randomising the intervals

|

||||||

|

a bit seems to have smoothed everything out.

|

||||||

|

|

||||||

|

Among the services being monitored is a small project I'm working on that is currently hosted

|

||||||

|

on a Hetzner VPS. This VPS is also running k3s, and serves up [[https://mc.gmem.ca][mc.gmem.ca]] while I work on the beta

|

||||||

|

version. The setup powering it is fairly straightforward, with a Kubernetes deployment pulling

|

||||||

|

images from my container registry, the container image itself being built and pushed with

|

||||||

|

[[https://sourcehut.org/][sourcehut]]'s build service. Originally, I tried hosting this on the same server as the Minecraft

|

||||||

|

server, but despite being able to build images for different architectures, it proved very slow

|

||||||

|

and error prone, so I opted to instead grab a cheap VPS to host it for the time being. I don't

|

||||||

|

forsee the need to scale it up anytime soon, but it will be easy enough to do.

|

||||||

|

|

||||||

|

A fair number of services I deploy or write rely on SQLite as a datastore, since I don't see much

|

||||||

|

point in deploying/maintaining a full database server like Postgres, so I've taken to playing

|

||||||

|

around with [[https://litestream.io/][Litestream]], which was recently "aquired" by Fly.io. This replicates over to the

|

||||||

|

aforementioned Hetzner storage box, and I might add a second target to the configuration for

|

||||||

|

peace of mind.

|

||||||

|

|

||||||

|

Speaking of Fly.io, I also leverage that! Mostly as an experiment, but I did have a valid

|

||||||

|

use case for it as well. My girlfriend does comissions for VRChat avatars, and needed a place to

|

||||||

|

showcase her work. I opted to build out a custom headless CMS and simple frontend (with Go and

|

||||||

|

SvelteKit, respectively) to create [[https://artbybecki.com/][Art by Becki]]. I'm no frontend dev, but the design is simple

|

||||||

|

enough and the "client" is happy with it. The frontend itself is hosted on CloudFlare Pages (most

|

||||||

|

of my sites or services have their DNS managed through CloudFlare), and images are served from

|

||||||

|

Backblaze B2. I covered all this in my previous post [[/posts/creating-an-artists-website/][Creating an Artist's Website]] so you

|

||||||

|

can read more about the site there. My own website (and this blog) is hosted with GitHub Pages,

|

||||||

|

so nothing to really write about on that front.

|

||||||

|

|

||||||

|

And with that, I think that's everything I currently self host, and how. I'm continuing to refine

|

||||||

|

the setup, and my current goals are to build the NAS I desperately need and find a proper solution

|

||||||

|

for writing/maintaining a personal knowledgebase. Be sure to either follow me on Mastodon [[https://tech.lgbt/@arch][tech.lgbt/@arch]]

|

||||||

|

or Twitter [[https://twitter.com/gmem_][twitter.com/gmem_]]. I'm sure I'll have a followup post when I finally get my NAS built

|

||||||

|

and deployed, with whatever trials and tribulations I encounter along the way.

|

||||||

57

posts/diy-api-documentation.md

Normal file

57

posts/diy-api-documentation.md

Normal file

|

|

@ -0,0 +1,57 @@

|

||||||

|

---

|

||||||

|

title: DIY API Documentation

|

||||||

|

date: 2016-02-24

|

||||||

|

---

|

||||||

|

|

||||||

|

#### How difficult can writing my own API doc pages be?

|

||||||

|

|

||||||

|

I needed a good documentation solution for

|

||||||

|

[NodeMC](https://nodemc.space)'s

|

||||||

|

RESTful API. But alas, I could find no solutions that really met my

|

||||||

|

particular need. Most API documentation services I could find were

|

||||||

|

either aimed more towards web APIs, like Facebook's or the various API's

|

||||||

|

from Microsoft, very, very slow, or just far too expensive for what I

|

||||||

|

wanted to do (I'm looking at you,

|

||||||

|

[readme.io](http://readme.io)). So,

|

||||||

|

as I usually do, I decided to tackle this issue myself.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

I knew I wanted to use Markdown for writing the docs, so the first step

|

||||||

|

was to find a Markdown-to-HTML converter that I could easily automate

|

||||||

|

for a smoother workflow. After a bit of research, I came along

|

||||||

|

[Pandoc](http://pandoc.org/), a

|

||||||

|

converter that does pretty much everything I need, including adding in

|

||||||

|

CSS resources to the exported file. Excellent. There is also quite a few

|

||||||

|

integrations for several Markdown (or text) editors, but none for vsCode

|

||||||

|

so I didn't need to worry about those, choosing instead to use the \*nix

|

||||||

|

watch command to run my 'makefile' every second to build to HTML.

|

||||||

|

|

||||||

|

The next decision I had to make was what to use for CSS. I was very

|

||||||

|

tempted to use Bootstrap, which I have always used for pretty much all

|

||||||

|

of my projects that I needed a grid system for. However, instead, I

|

||||||

|

decided on the much more lightweight

|

||||||

|

[Skeleton](http://getskeleton.com/)

|

||||||

|

framework, which does pretty much everything I need to in a much smaller

|

||||||

|

package. Admittedly it's not as feature-packed as Bootstrap, but it does

|

||||||

|

the job for something that is designed to be mostly text for developers

|

||||||

|

who want to get around quickly. Plus, it's not too bad looking.

|

||||||

|

|

||||||

|

So the final piece of the puzzle was "how can I present the information

|

||||||

|

attractively?", which took a little bit more time to figure out. I

|

||||||

|

wanted to do something like what most traditional companies will do,

|

||||||

|

with a sidebar table of contents, headers, etc. The easiest way to do

|

||||||

|

this was a bit of custom HTML and a handy bit of Pandoc parameters, and

|

||||||

|

off to the races.

|

||||||

|

|

||||||

|

Now at this point you're probably wondering why I'm not just using

|

||||||

|

Jekyll, and the answer to that is... well, I just didn't. Honestly I

|

||||||

|

wanted to try to roll my own Jekyll-esque tool, which while slightly

|

||||||

|

less efficient still gets the job done.

|

||||||

|

|

||||||

|

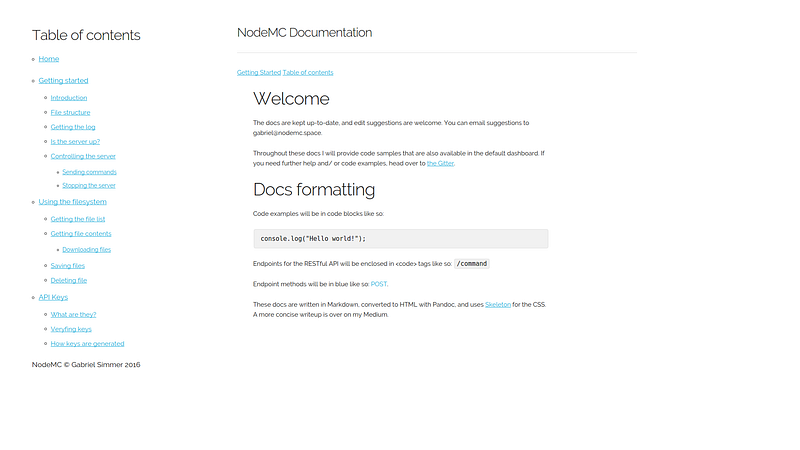

So where can you see these docs in action? Well, you can view the

|

||||||

|

finished result over at

|

||||||

|

[docs.nodemc.space](http://docs.nodemc.space), and the source code for the docs (where you can make

|

||||||

|

suggestions as pull requests) is available on [my

|

||||||

|

GitHub](https://github.com/gmemstr/NodeMC-Docs), which I hope can be used by other people to build

|

||||||

|

their own cool docs.

|

||||||

58

posts/emacs-induction.org

Normal file

58

posts/emacs-induction.org

Normal file

|

|

@ -0,0 +1,58 @@

|

||||||

|

#+title: Emacs Induction

|

||||||

|

#+date: 2021-09-01

|

||||||

|

|

||||||

|

*** Recently, I decided to pick up Emacs.

|

||||||

|

|

||||||

|

/sidenote: this is my first post using orgmode. so apologies for any weirdness./

|

||||||

|

|

||||||

|

I've always been fascinated with the culture around text editors. Each one is formed

|

||||||

|

of its own clique of dedicated users, with either a flourishing ecosystem or floundering

|

||||||

|

community (see: Atom). You have the vim users, swearing by the keyboard shortcuts,

|

||||||

|

the VSCode users, pledging allegiance +to the flag+ Microsoft and Node, the Sublime

|

||||||

|

fanatics, with their focused and fast editor, and the emacs nerds, living and breathing

|

||||||

|

(lisp). And all the other editors and their hardcore users (seriously, we could spend

|

||||||

|

all day listing them). And the fantastic thing is, all of them (except Notepad) are

|

||||||

|

perfectly valid options for development. Thanks to the advent of the Language Server

|

||||||

|

Protocol, most text extensible editors can be turned into competent code editors (not

|

||||||

|

neccesarily IDE replacements, but good enough for small or day to day use).

|

||||||

|

|

||||||

|

Up until recently, I've been using Sublime. It's a focused experience with a very small

|

||||||

|

development team and a newly revived ecosystem, and native applications for any platform

|

||||||

|

I care to use. I've used VSCode, Atom, and Notepad++ previously, but never really delved

|

||||||

|

much into the world of "text based" (for lack of better term?) editors, including vim

|

||||||

|

and emacs. The most exposure was using nano for quick configuration edits on servers or

|

||||||

|

vim for git commit messages. Emacs evaded me, and I had little interest in switching

|

||||||

|

away from the editors I already understood. But as I grew as a developer and explored

|

||||||

|

new topics, including Clojure and Lisps in general, I quickly realized that to go further

|

||||||

|

I would need to dig deeper into more foreign concepts and stray from the C-like languages

|

||||||

|

I was so comfortable with. The first few days at CircleCI, I was introduced to [[https://clojure.org/][Clojure]],

|

||||||

|

and I quickly grew more comfortable with the language and concepts (although I am

|

||||||

|

nowhere near experienced enough to write a more complete application), and I have that

|

||||||

|

to thank for my real interest in lisps.

|

||||||

|

|

||||||

|

Several failed attempts later, I managed to get a handle on how Guix works on a surface

|

||||||

|

level. My motivation for this was trying to package Sublime Text, which, while I make

|

||||||

|

significant progress, I hit some hard blockers that proved tough to defeat. This

|

||||||

|

sparked me to invest time into emacs, the operating system with an okay text editor.

|

||||||

|

For a while, leading up to this, I've subscribed and consumed [[https://www.youtube.com/c/systemcrafters][System Crafters]], an

|

||||||

|

excellent resource for getting started with emacs configuration (among other related

|

||||||

|

topics). It was part of my inspiration to pick up emacs and play around with it - I don't

|

||||||

|

typically enjoy watching video based tutorials, especially for programming, but thanks

|

||||||

|

to the livestreamed format presented it was much easier to consume.

|

||||||

|

|

||||||

|

So far, I'm enjoying it. Now that I have more of a handle on how lisps work, it's a much

|

||||||

|

smoother experience, and I do encourage developers to exit their comfort zone of C-like

|

||||||

|

languages and poke around a lisp. There's a learning curve, for sure, but the concepts

|

||||||

|

can be applied to non lisp languages as well. The configuration for my emacs setup is

|

||||||

|

(so far) relatively straightforward, and I haven't spent much time setting it up with

|

||||||

|

language servers or specific language modes, but for writing it's pretty snappy (and

|

||||||

|

pretty). [[https://orgmode.org][Orgmode]] is a very interesting experience coming from being a staunch Markdown

|

||||||

|

defender, but it's not a huge adjustment and the experience with emacs is sublime. It's

|

||||||

|

also usable outside of emacs, although I can't speak to the experience, and GitHub

|

||||||

|

supports it natively (and Hugo, thank goodness). [[https://justin.abrah.ms/emacs/literate_programming.html][Literate programming]] also seems like

|

||||||

|

a really neat idea of blog posts and documentation, and I might switch my repository

|

||||||

|

READMEs over to it for things like configuration templates. These are still early days

|

||||||

|

though - I've only been using emacs for a few days and am still working out where it

|

||||||

|

fits in to my development workflow beyond markdown/orgmode documents.

|

||||||

|

|

||||||

|

/sidenote: emacs or Emacs?/

|

||||||

38

posts/enjoying-my-time-with-nodejs.md

Normal file

38

posts/enjoying-my-time-with-nodejs.md

Normal file

|

|

@ -0,0 +1,38 @@

|

||||||

|

---

|

||||||

|

title: Enjoying my time with Node.js

|

||||||

|

date: 2015-12-19

|

||||||

|

---

|

||||||

|

|

||||||

|

#### Alternatively, I found a project to properly learn it

|

||||||

|

|

||||||

|

A bit of background -- I've been using primarily PHP for any backend

|

||||||

|

that I've needed to do, which while works most certainly doesn't seem

|

||||||

|

quite right. I have nothing against PHP, however it feels a bit dirty,

|

||||||

|

almost like I'm cheating when using it. I didn't know any other way,

|

||||||

|

though, so I stuck with it.

|

||||||

|

|

||||||

|

Well I recently found a project I could use to learn Node.js -- a

|

||||||

|

Minecraft server control panel -- and I've actually been enjoying it,

|

||||||

|

much more than I have PHP. Here's a demo of my project:

|

||||||

|

|

||||||

|

https://www.youtube.com/embed/c0IGKEmHyOM?feature=oembed

|

||||||

|

|

||||||

|

It's all served (very quickly) by a Node.js backend, that wraps around

|

||||||

|

the Minecraft server and uses multiple POST and GET routes for various

|

||||||

|

functions, such as saving files. The best part about it is how fast it

|